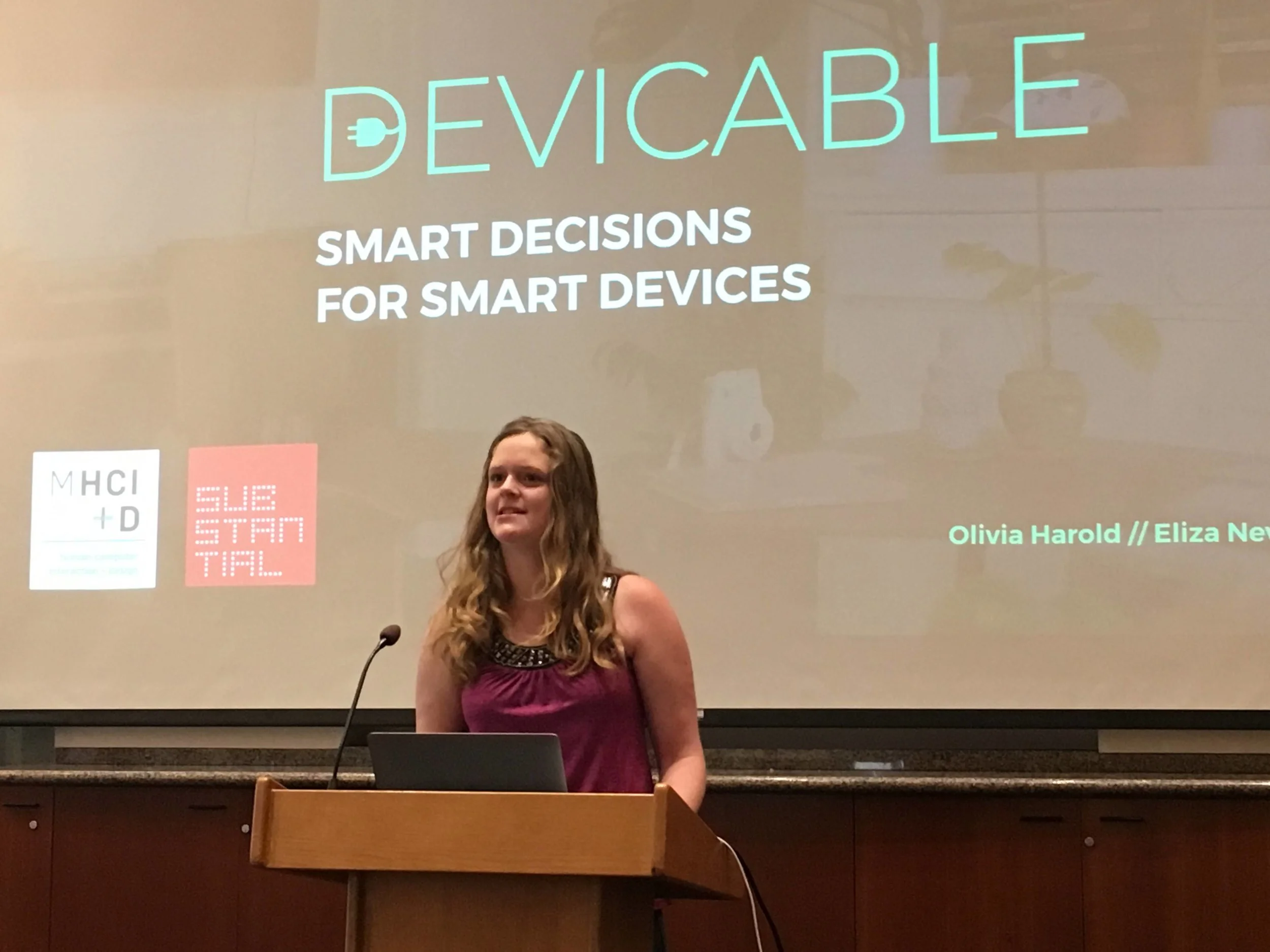

DEVICABLE

Smart Decisions for Smart Devices

Overview

Master’s Capstone project in which I conducted generative research on privacy and security in connected home devices, followed by design research on the resulting solution: Devicable, a website that gives you specific actions and instructions based on your concerns and makes the tradeoffs explicit.

My primary roles: Researcher, Project Management, Study Design, Visual System, Product Flows for Specification

Project Duration: 8 months (December 2017-August 2018)

Team: Olivia Harold, Eliza Newman-Saul

Deliverables: Research report, research presentation, solution presentation, UI specifications, product video, product poster.

How might we create a home IoT interface that answers users’ concerns about privacy and security?

Product Video and Philosophy

Throughout the design process, we identified key goals for empathetic design:

Support with evidence. We are here to help people make informed decisions, and to earn their trust.

Allow for differences in people, values, and concerns. Do not tell people what to do, but rather offer thoughtful options.

Make all information accessible without an account so users never feel pressured to share their personal information and data.

Key Features

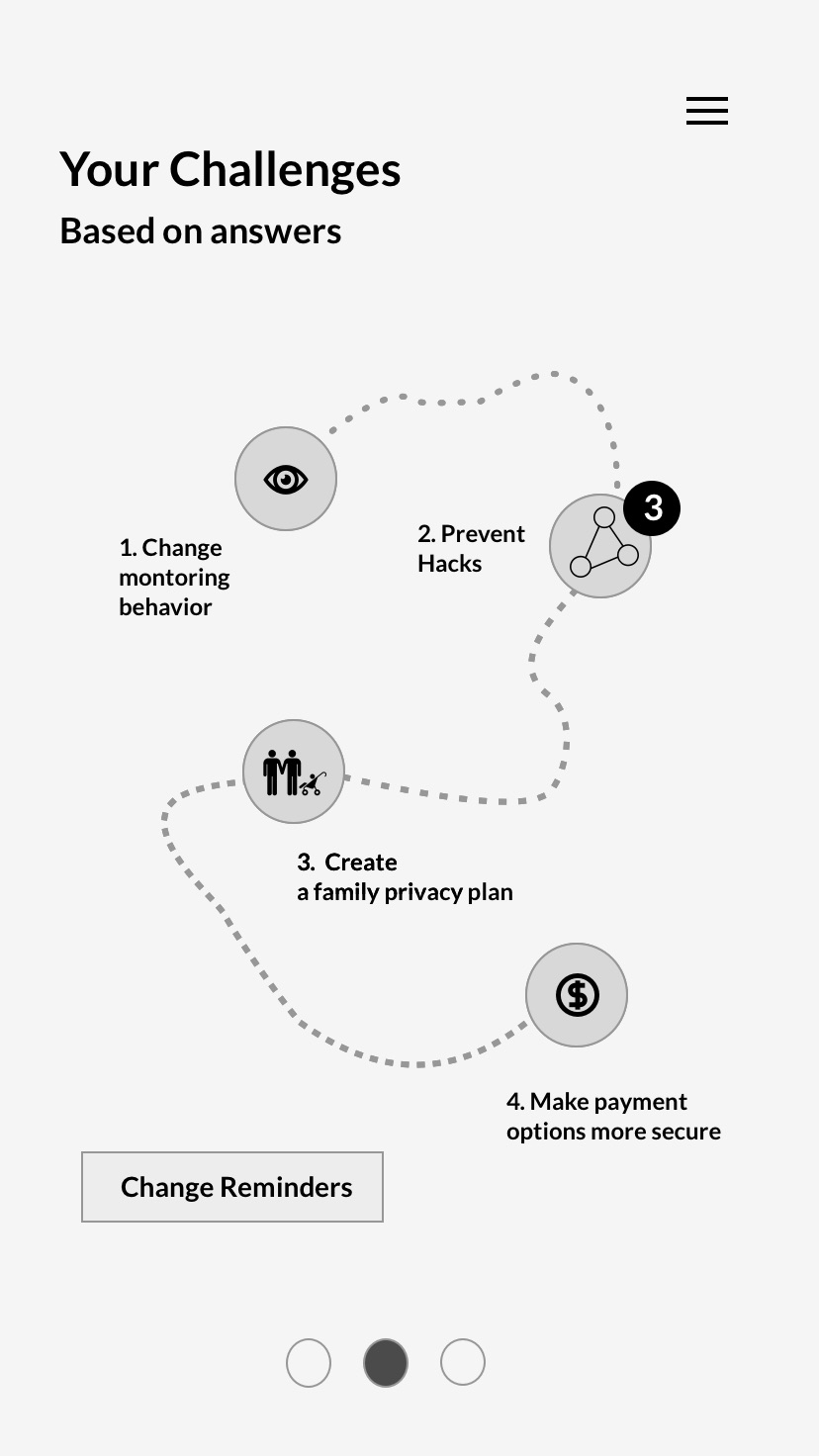

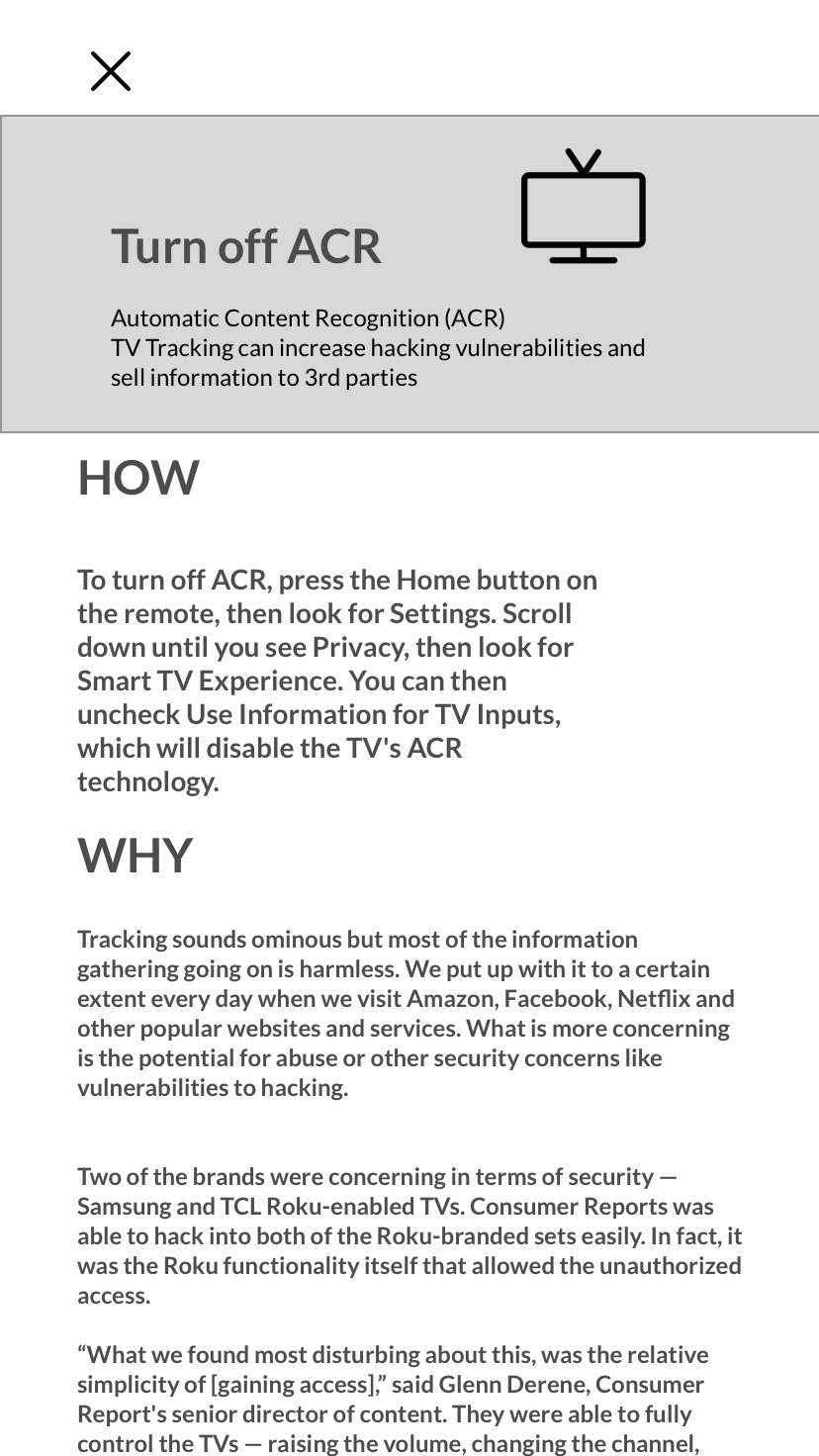

Privacy Manager

Receive actions based on your devices and concerns. Click through to an overview of each action, and then to detailed instructions and more information.

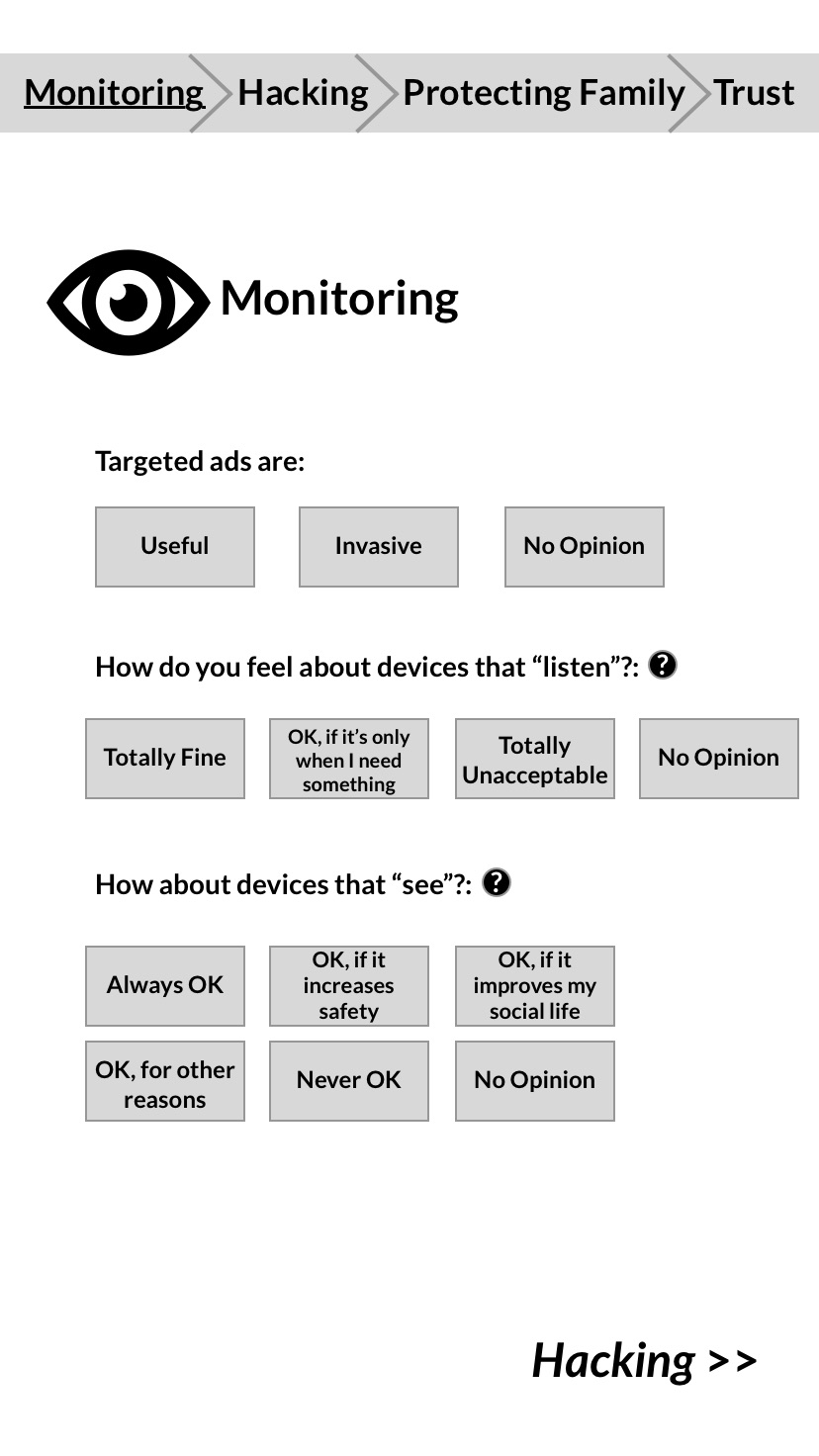

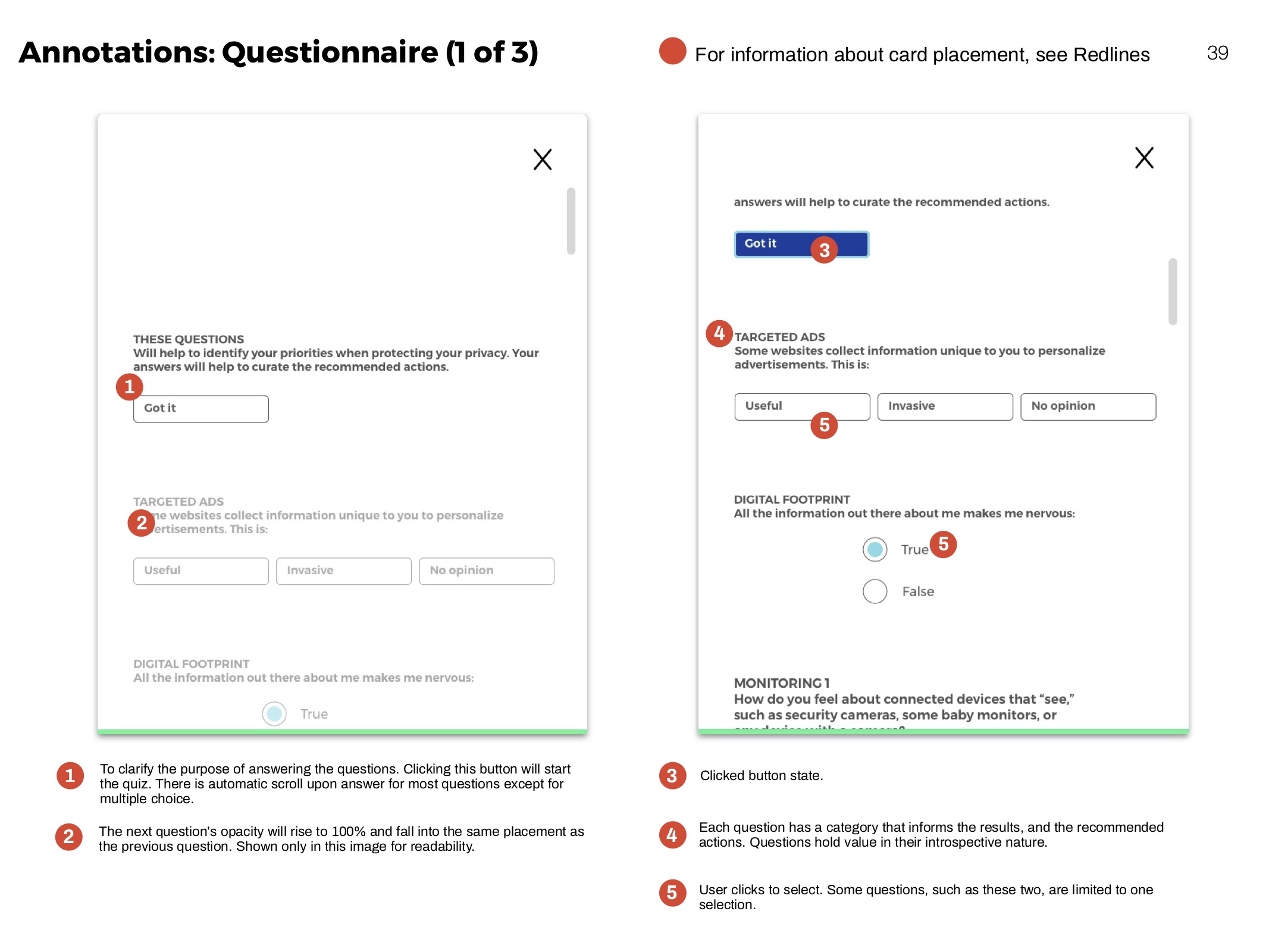

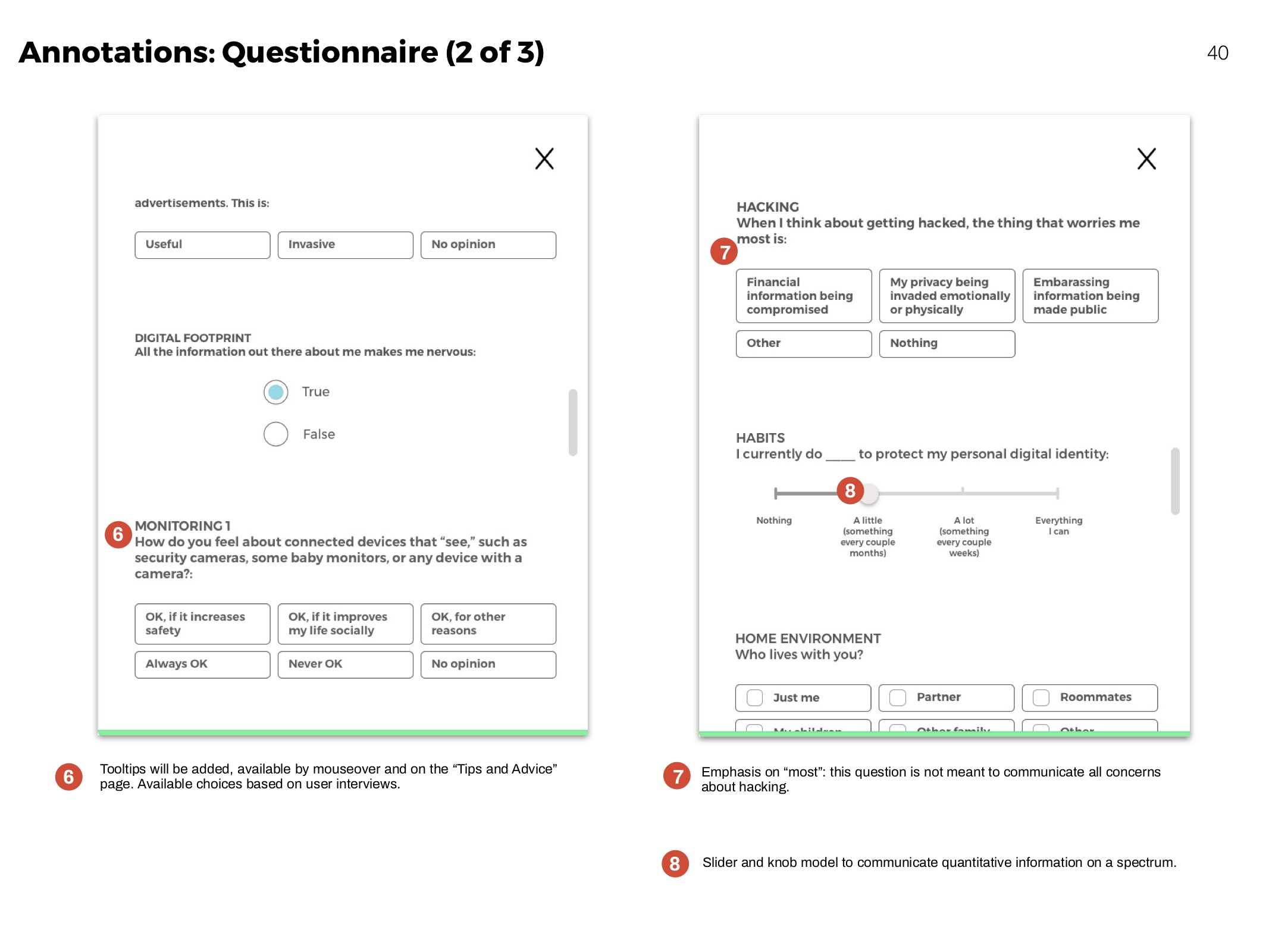

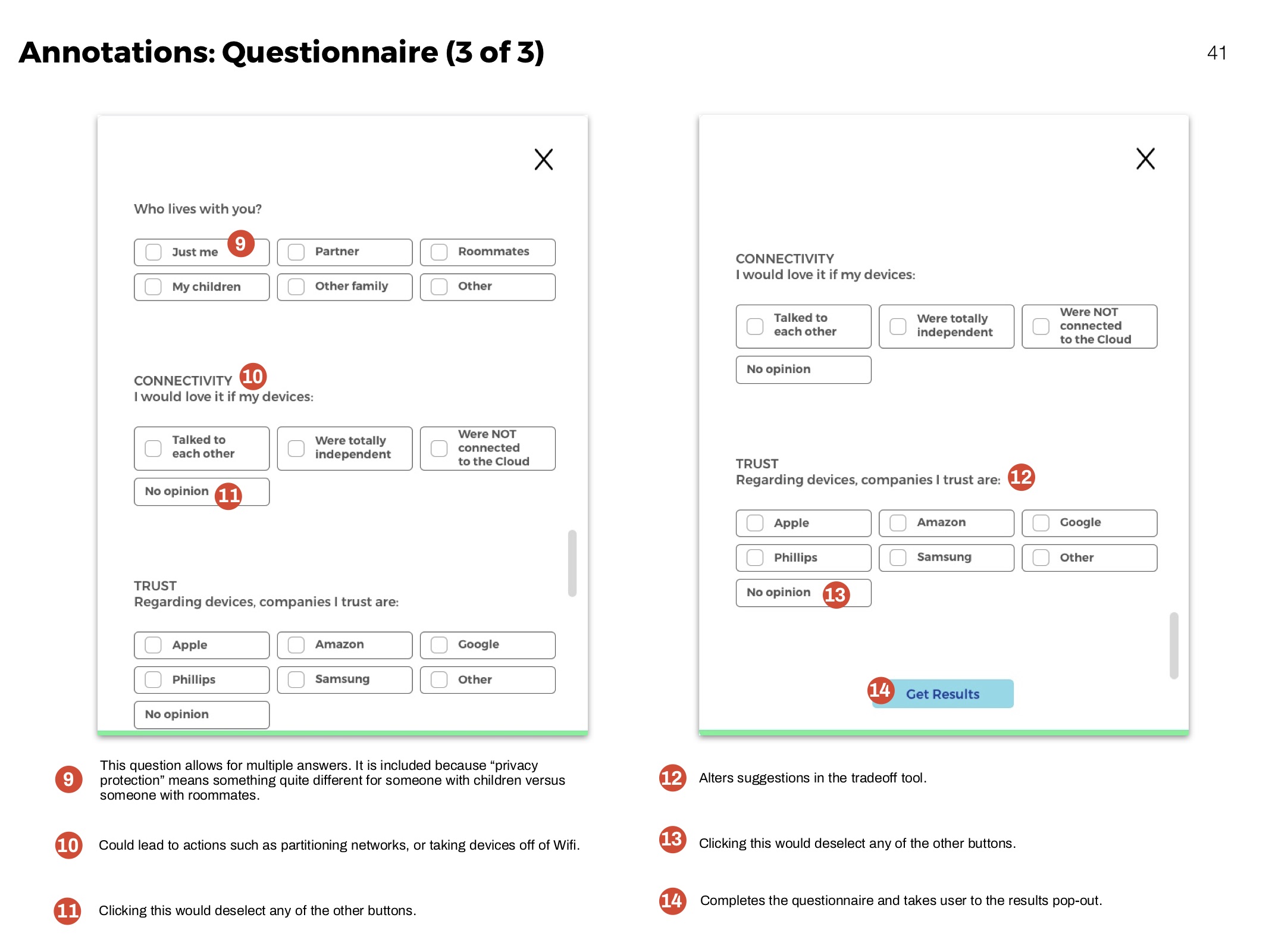

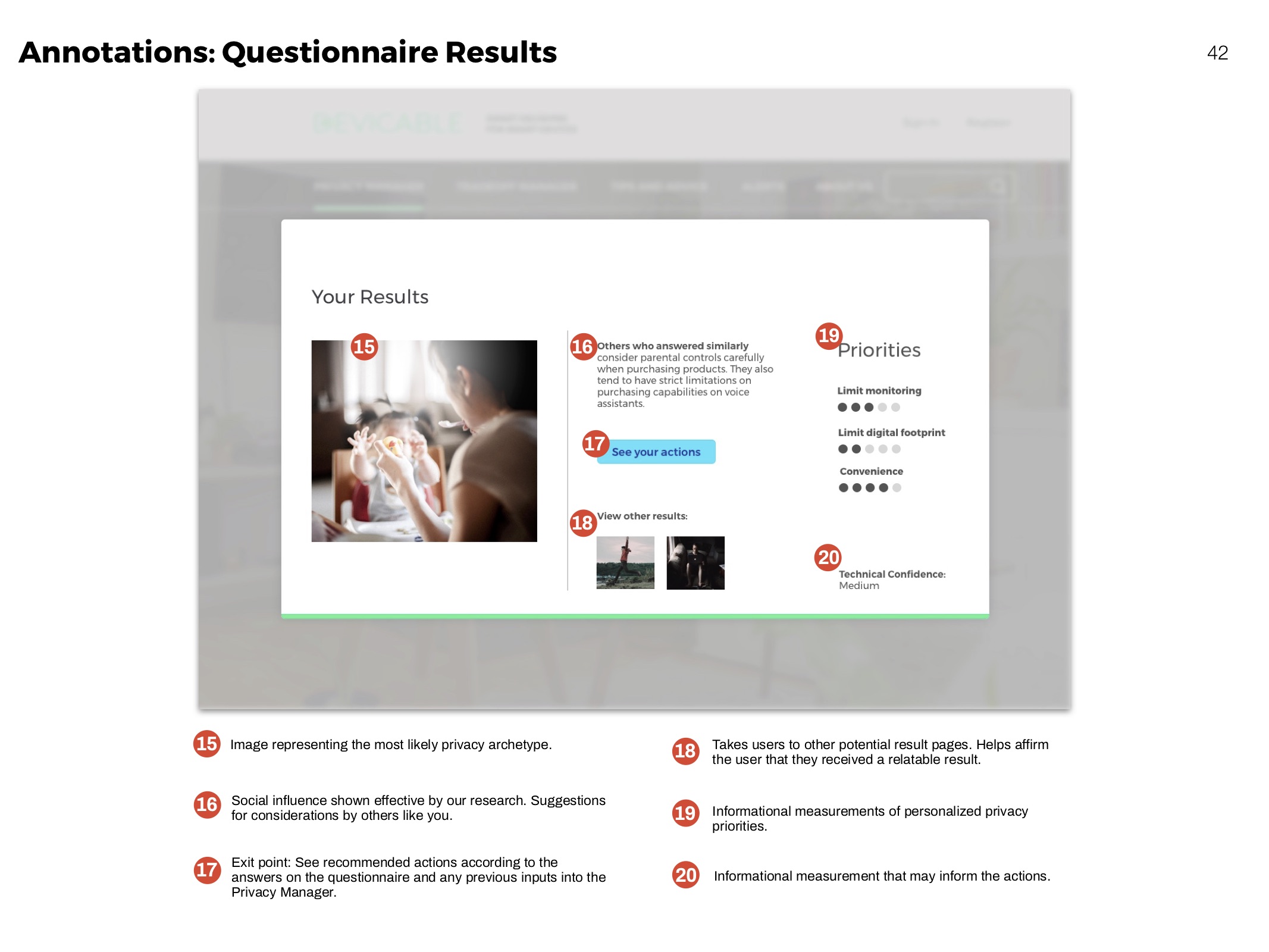

Questionnaire

The questionnaire helps people learn more about their personal concerns, and how they compare to others. It identifies and ranks privacy priorities.

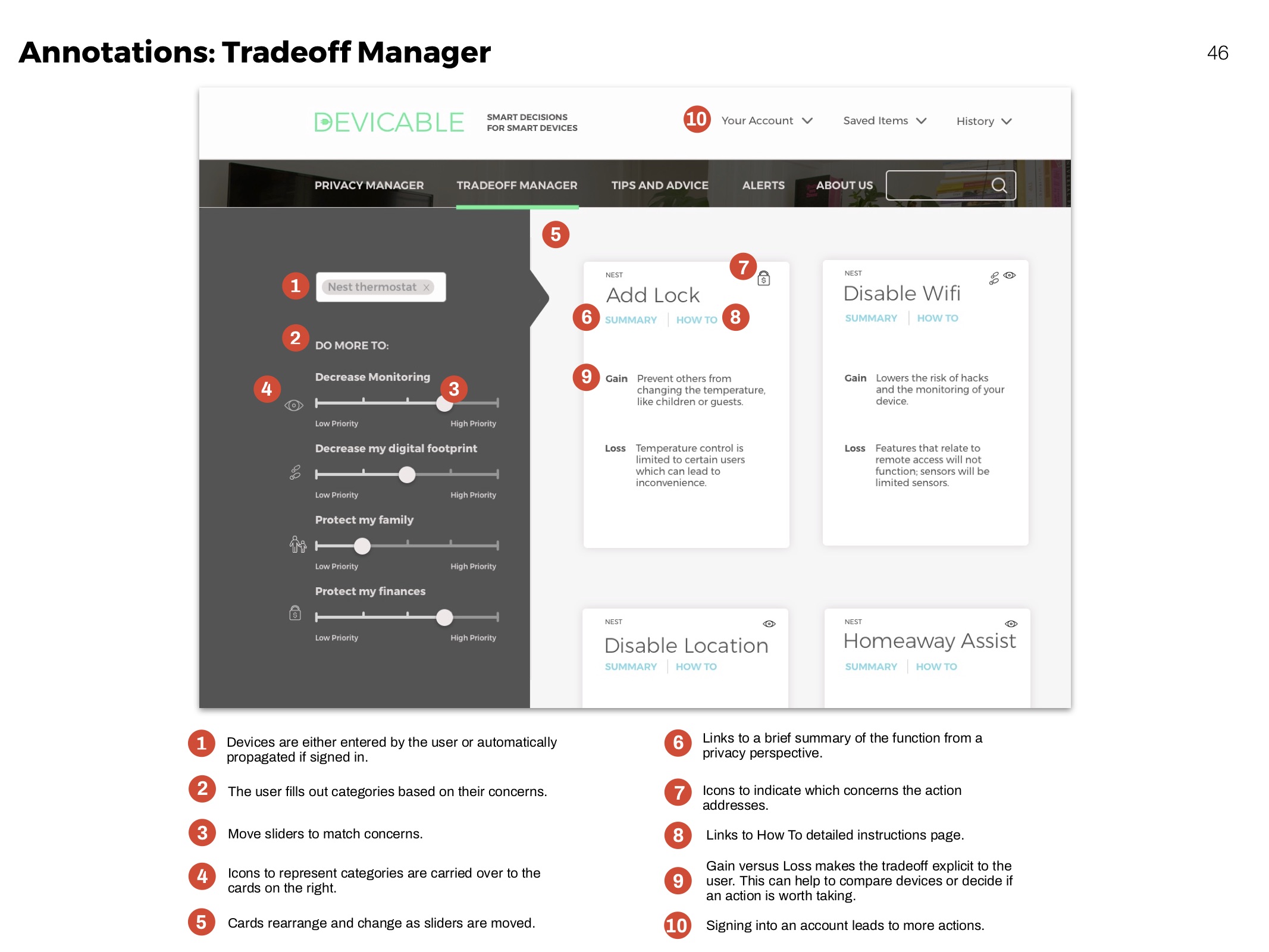

Tradeoff Manager

Predict how a device’s functionality might change according to the actions taken.

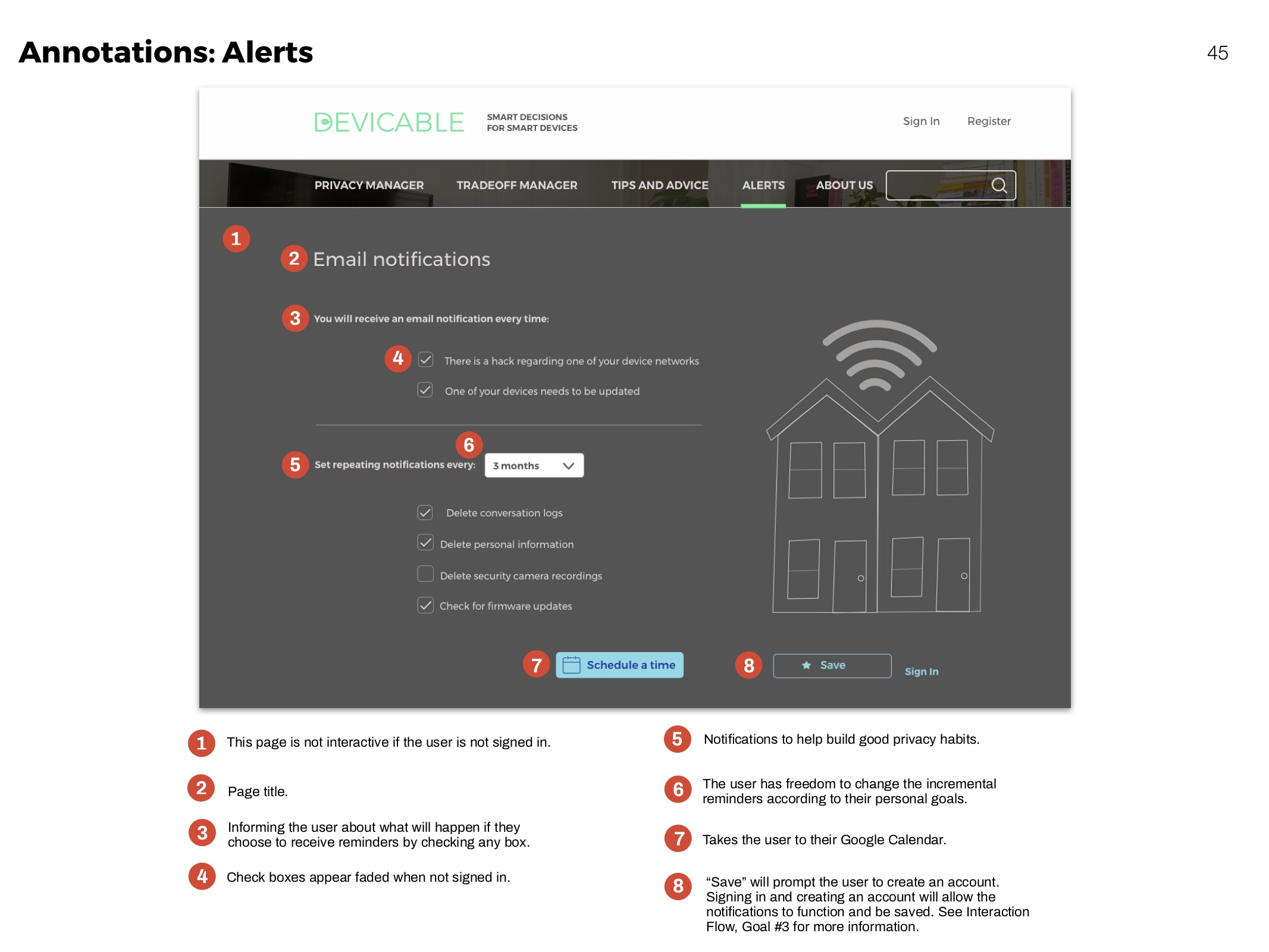

Reminders

Maintain safety habits and know when an important update is issued.

Research Structure: Overview

Formative Research

Secondary:

8 expert interviews

30+ literature review papers

Primary:

12 participant sessions

628 responses to attitudinal survey

Competitive analysis of 30 products

Design Research

3 phases of usability research on Low, Medium, and High fidelity prototypes.

Formative Research: Secondary

I needed to see what is already known regarding home IoT to build a foundation of knowledge and define the problem space. This included large-scale attitudinal surveys and publications as professional assessments of the situation. We conducted an ongoing literature review as well as expert interviews.

Literature Review

VALUE

IoT’s primary value is not in solving a technical challenge, but in improving experiences through convenience, control, customization, and automation. Cisco interviewed 3,000 consumers in the US and Canada and found “consumers believe these products and services deliver ‘significant value,’ but they don’t understand or trust how the data they share with providers is managed or used.”

PRIVACY AND SECURITY

The largest technical challenge in implementing IoT systems is security. Unfortunately, the “Smart” devices present in homes are particularly susceptible to hacking (Arabo et al., 2012). Security weakens further without maintenance such as updating, leaving systems open to malicious attacks.

DATA COLLECTION

Concerns about the collection of personal data are backed up by quantitative data such as surveys. One survey found that 68% of people were concerned about the exposure of personal data in a connected home environment (Fortinet, 2014). Another found that 85% of Internet users would want to know and control more of the data collected from their devices (TRUSTe, 2014).

Expert Interviews

Interviewees included Batya Friedman (Professor at the University of Washington and author of Informed Consent by Design), Gilad Rosner (founder of the Internet of Things Privacy Forum), Michelle Chang (UX Designer at Electronic Frontier Foundation), and Peter Bihr (Co-founder of ThingsCon and Mozilla Trustmark Fellow) (top row), as well as experts consulted for technical expertise (bottom row).

We conducted interviews with academics and advocates. Advocates spoke of the need for transparency, both at the interface level and with data collection. I also gained insight into the players involved (government, companies, and individuals) and learned that the onus of responsibility should not fall on the individual. We also learned about a trustmark currently being developed for IoT products.

“We live in an informational environment that’s only increasing in scope and scale, and to expect us to be able to manage it when the tools aren’t awesome to start is ridiculous.”

Competitive Analysis

I worked with my team to identify devices that centralize and control Smart Home devices, viewed through the lens of security. We also investigated Smart thermostats, even though they would not be an area of redesign, because understanding their successes provided valuable design insights. We chose the products to study based on personal interest. Research for each product included reading websites, blogs, reviews, and forums, watching videos by both users and manufacturers, and installing companion apps and browser-based products.

Routers

Voice Assistants

Physical privacy and security managers

Privacy and security browser extensions

Smart thermostats

FINDINGS

“Things” are merging together in both software and hardware.

The user experience is greatly diminished by poor feedback and reliability.

Security products are successful, but lack transparency.

DESIGN RECOMMENDATIONS

Ecosystems should incorporate many “Things” seamlessly, and account for multiple users.

Prioritize convenience without sacrificing control or transparency.

Formative Research: Primary

I identified research questions to better understand users of these products and identify points of intervention to alleviate their concerns. Directed questions shaped the design of participant sessions and filled in holes left by secondary research: How do people define privacy risks, and do their definitions match proven risks? How do people derive value from these devices? What do people understand about data collection, and how does it inform their decisions? What are the complications of protecting digital identity?

Recruitment Flyer

WHO

We conducted sessions with 12 subjects (S1-S12; mean=40.5, s=13.57, 4 male, 1 MtF). We targeted participants to get a range of ages and genders, and required the following:

Potential home-buyers with a minimum age of 27 years.

Own at least one Smart device (baby monitor, thermostat, light bulb, security camera, wireless caregiver, voice assistant, refrigerator, toy, television, plug).

Self-reported as concerned about privacy and security, but not to the point of paranoia (2, 3, or 4 on a scale of 1 to 5).

WHERE

Participant homes (2); Skype, also from home (4); participant workplace (1); MHCI+D studio (5). Interviewing in the home when possible preserved some fidelity of self-report.

WHAT

Study activities (conducted on Subject#, changed as saturation was reached)

01. Spectrum card sort (S1-S9)

02. Matrix card sort (All)

03. Semi-structured interview (All)

04. Privacy policy think aloud (S1-S4)

05. Design activity (S9-S12)

Spectrum card sort

Synthesis

We coded attitudinal verbalizations from each audio and video session.

Because of the large quantity of data we collected, I decided to exclude behavioral information.

Perceived Value and Risk of Smart Devices

Using each card sort artifact, I recorded every participant’s rating of each device as Low, Medium, or High in Excel. I aggregated the ratings and created the following charts, which highlighted interesting differences between value and risk judgements.
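The aggregation itself was done in Excel. As a rough equivalent, the following Python sketch counts Low, Medium, and High ratings per device. The function name, the tuple layout, and the sample rows are all assumptions made for illustration; the rows are placeholders, not the study's data.

```python
from collections import Counter, defaultdict

LEVELS = ("Low", "Medium", "High")


def aggregate_ratings(ratings):
    """Count Low/Medium/High ratings per device.

    `ratings` is an iterable of (participant, device, level) tuples.
    Returns {device: {"Low": n, "Medium": n, "High": n}}.
    """
    counts = defaultdict(Counter)
    for _participant, device, level in ratings:
        if level not in LEVELS:
            raise ValueError(f"unknown level: {level!r}")
        counts[device][level] += 1
    # Fill in zero counts so every device reports all three levels.
    return {
        device: {lvl: tally[lvl] for lvl in LEVELS}
        for device, tally in counts.items()
    }


# Placeholder rows (hypothetical, not the study's results).
value_ratings = [
    ("S1", "thermostat", "High"),
    ("S2", "thermostat", "Medium"),
    ("S1", "voice assistant", "Low"),
]
risk_ratings = [
    ("S1", "thermostat", "Low"),
    ("S2", "thermostat", "Low"),
    ("S1", "voice assistant", "High"),
]

value_counts = aggregate_ratings(value_ratings)
risk_counts = aggregate_ratings(risk_ratings)
```

Running the same function over the value sort and the risk sort gives two tables with identical shape, which makes it straightforward to chart them side by side and spot devices rated high on one dimension and low on the other.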

VALUE

Participants assigned their own definitions of value, which differed both according to person, and even across devices within the same participant: convenience, financial savings, environmental monitoring, home automation, entertainment, comfort, home modification, health monitoring, connection management, and accessibility.

RISK

Participants assigned their own definitions of risk. Definitions differed both according to person, and even across devices within the same participant: spying potential, reliance, reliability, multiple entities having access, the device being always on, and the brand’s security reputation.

The Card Sort activity confirmed that people have conflicting information and opinions about the risk of Smart devices, which informed a design solution that makes the information clear and verifiable.

“We give up privacy for convenience, but that doesn’t mean we shouldn’t be cautious.”

CONCERNS: SURVEY RESULTS

The survey was meant to supplement the attitudes I explored in participant sessions. Most responses came from reddit.com/r/homeautomation and reddit.com/r/googlehome. I used Google Forms to collect responses and exported them to CSV for analysis. I outsourced the data for statistical analysis, but ultimately decided that the questions were not controlled enough to support convincing conclusions about statistical significance. Instead, I combed through the data and sorted responses into broad categories.
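Once responses have been hand-sorted into categories, tallying the share of each category is a small step. The sketch below assumes the CSV export has been given an extra `category` column during manual coding; that column name, the function name, and the sample rows are hypothetical and do not reflect the survey's actual export or results.

```python
import csv
import io
from collections import Counter


def category_shares(csv_text, column="category"):
    """Return the fraction of coded responses that fall in each category.

    Rows with an empty or missing category are skipped.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter()
    for row in reader:
        label = (row.get(column) or "").strip()
        if label:
            counts[label] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {label: n / total for label, n in counts.items()}


# Placeholder rows (hypothetical, not the survey's data).
sample = """answer,category
Yes,conditional
Yes,unconditional
No,cloud
Yes,conditional
"""

shares = category_shares(sample)
```

Because the categories come from manual coding rather than controlled questions, the resulting shares describe this sample only and should not be read as statistically significant.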

Users had the chance to explain their answers.

17% of Yes answers included a conditional (e.g., devices are secure if you know how to use them properly). A recurring caveat was cloud connectivity: some respondents held that anything connected to the cloud is not secure. Some users had set up entire offline home automation networks to avoid the cloud.

In this population that is heavily biased towards home connected devices, the majority still expressed concerns about privacy and data collection.

Abstracting to Personas

I placed each subject on two spectrums based on themes that arose during the interview phase: amount of technical command, and amount of concern. The hashed area contains the users we design for. Some users were omitted because their concern was so high that they avoided connected devices, or because their technical expertise made them self-sufficient at protecting their privacy.

I identified themes from user interviews and abstracted to craft 4 personas: 2 target users and their anti-personas. I designed anti-personas because knowing why people are untroubled also informs our design, but convincing users to be concerned is outside the scope of our design solution.

Primary Personas


Negative Personas

Insights

01. SOCIAL AND NEWS EVENTS MAKE TECHNOLOGY UNDERSTANDABLE AND MOTIVATE BEHAVIOR CHANGE.

“Turns out Alexa is storing audio info...my jaw dropped, wait what?” (P2)

“I subscribed to a VPN, but sometimes I forget to pay and it runs out, then something happens that reminds me of why I wanted it in the first place.” (P3)

We saw that many people took action and changed behavior after a news event, or after conversations with friends. We hypothesize that part of the explanation is that mainstream media, as well as peers, explain complicated concepts in understandable terms rather than technical language. Furthermore, sources outside of the device companies provide less biased information.

02. INDIVIDUALS PERCEIVE PRIVACY MANAGEMENT AND MAINTENANCE AS CONFUSING AND TIME-CONSUMING.

“I don’t have time to maintain or update... It shouldn’t be on the individual to be constantly defending themselves.” (P3)

“Companies provide the tools and information, but what user controls anything with that level of granularity?” (SME)

The Troubled Casual technology users expressed a desire to understand. Even if information about privacy management is easy to access, people see it as time-consuming. One person came to us because she was concerned after reading her Alexa voice logs, but mentioned that accessing the records was easier than she expected. When asked to read a privacy policy, participants could understand and piece together the information, and recognized when the language was purposefully vague or jargony. However, they became bored quickly. A boring task, although easy, will contribute to a higher perceived workload and lower sustainability of a behavior.

03. WE LIVE WITH BEING HARVESTED FOR THE BENEFIT OF CONNECTION AND CONVENIENCE.

“I had to sign a bunch of agreements that I wasn’t fond of.” (P8)

“We give up privacy for convenience, doesn’t mean we shouldn’t be cautious.” (P2)

Data collection is troubling, but not enough to give up services and benefits such as the social connection provided by Smart phones, or the convenient way-finding provided by location-tracking. An avid runner said that the biometric data he was receiving from his Garmin watch was more useful to him than his data was to Garmin.

04. IOT TRENDS TOWARDS OPAQUE INTERFACES.

“I applaud people who go to extremes to research devices and what they can do.” (P9)

“As I become more informed I become more suspicious.” (P2)

Devices are geared towards completing tasks with minimal interactivity, and are not informative about themselves. Opaque design trends are at odds with the need for easy troubleshooting and for building trust with users through transparency.

Design Principles

01. Lower the mental load.

02. Prioritize convenience.

03. Make interfaces transparent and understandable.

04. Think long-term.

05. Avoid fear-based solutions.

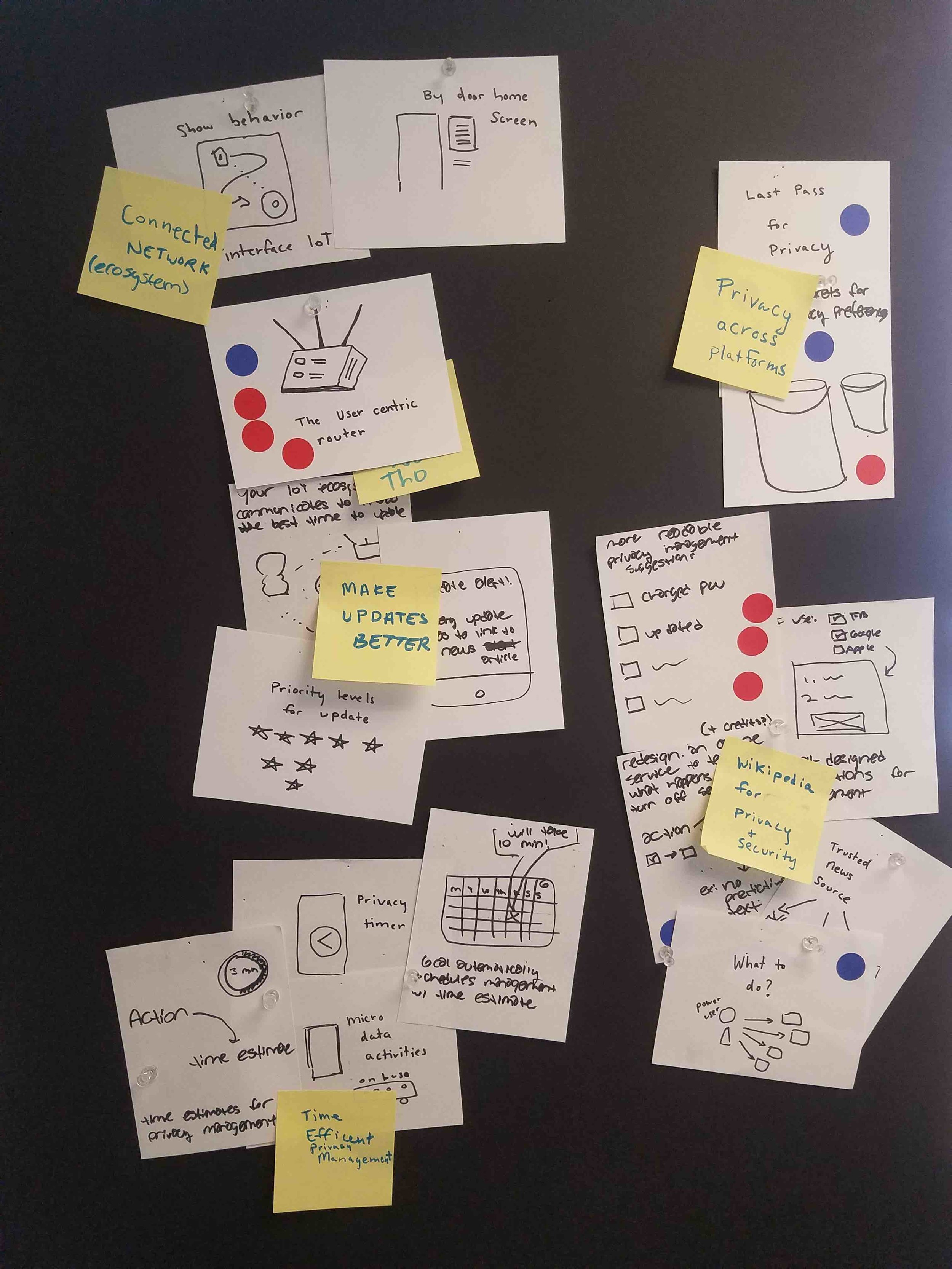

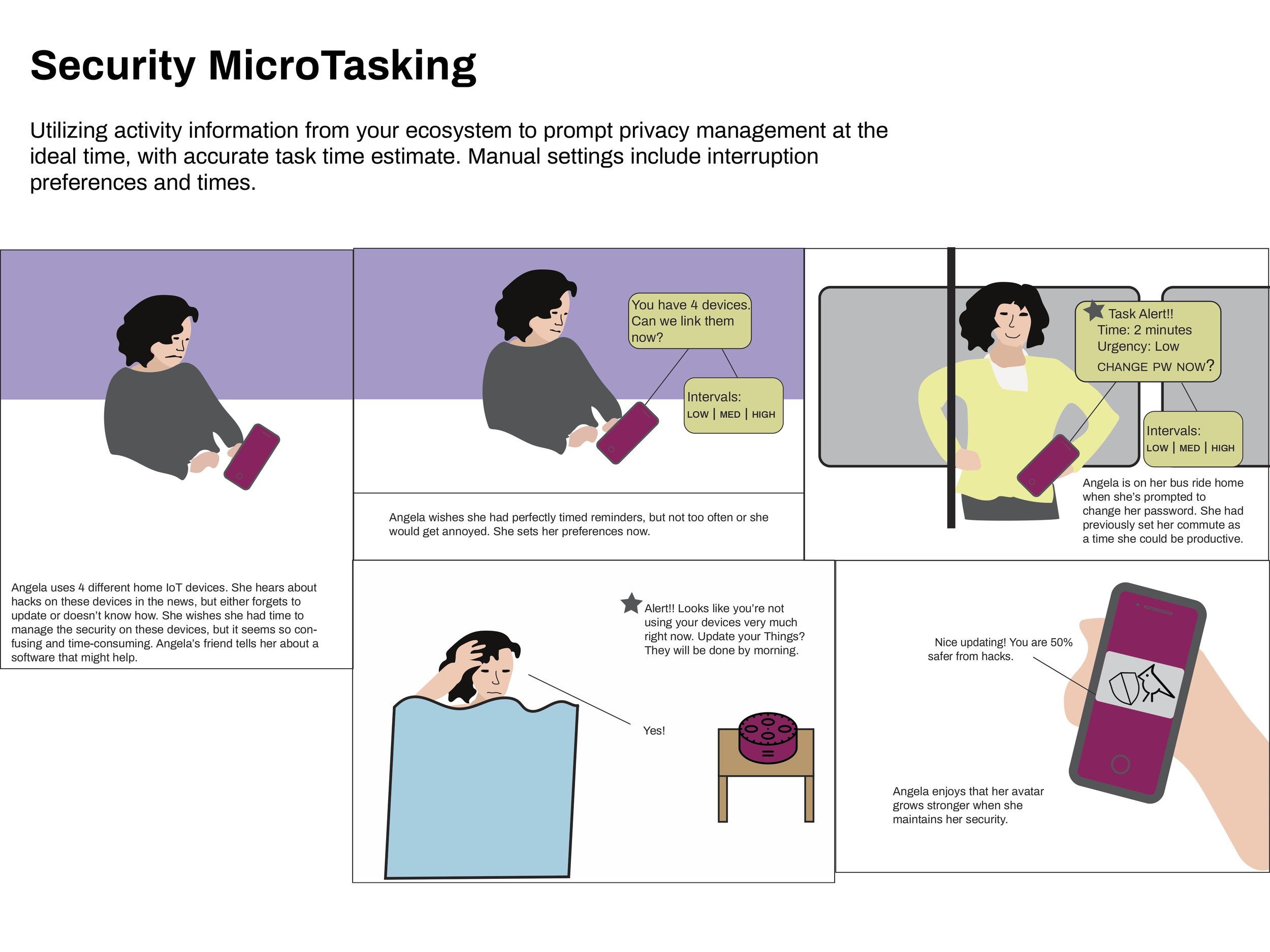

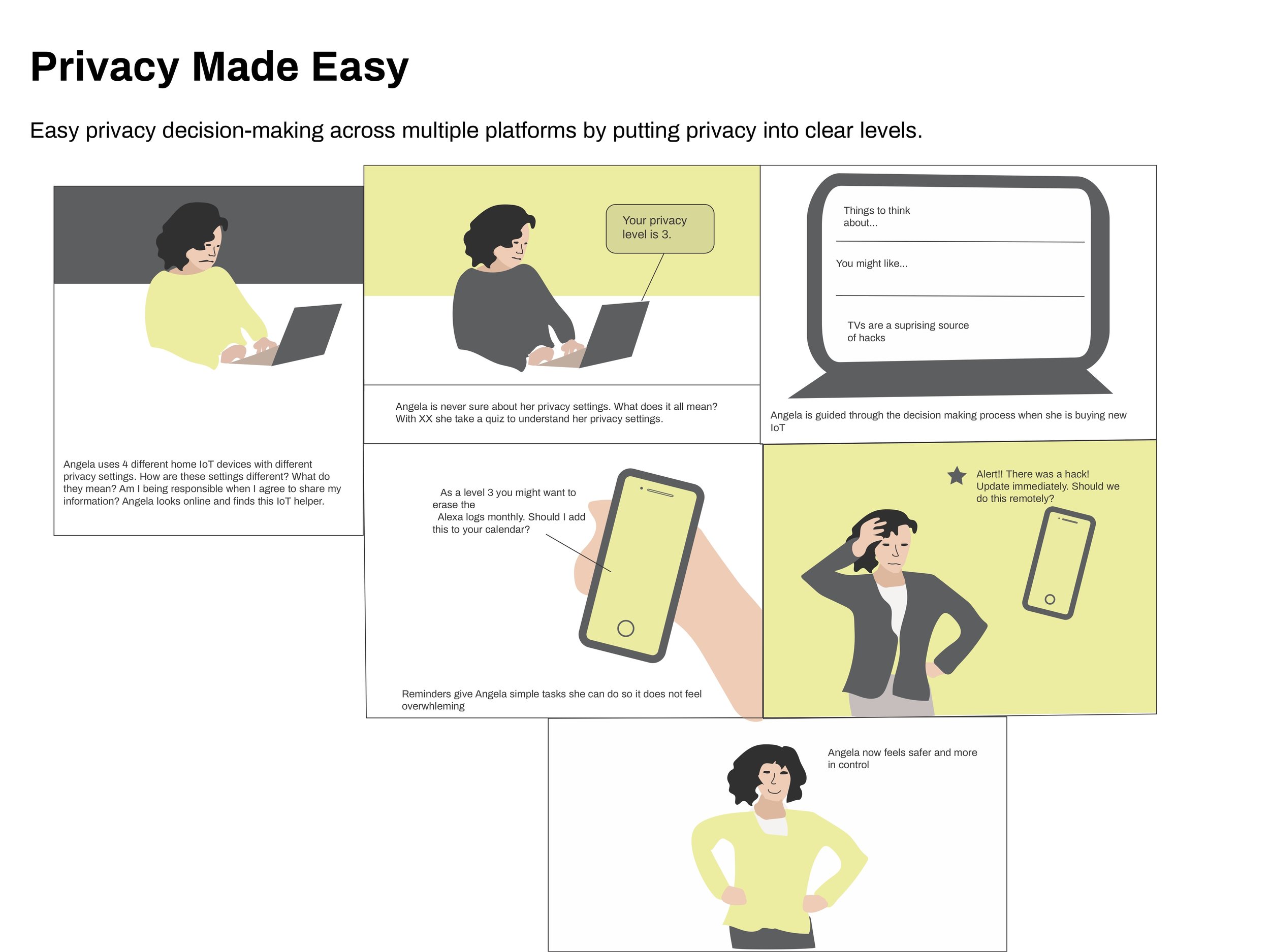

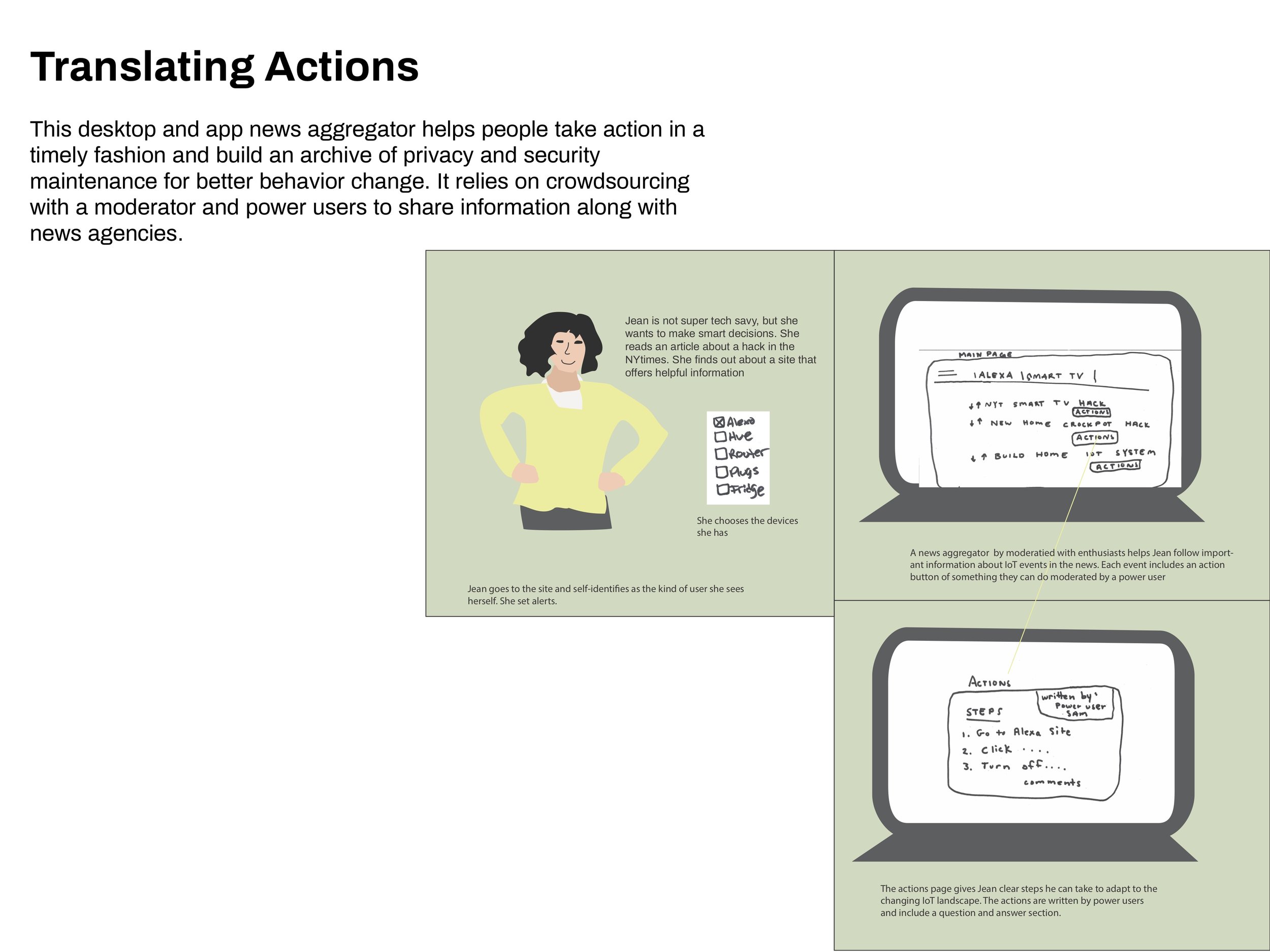

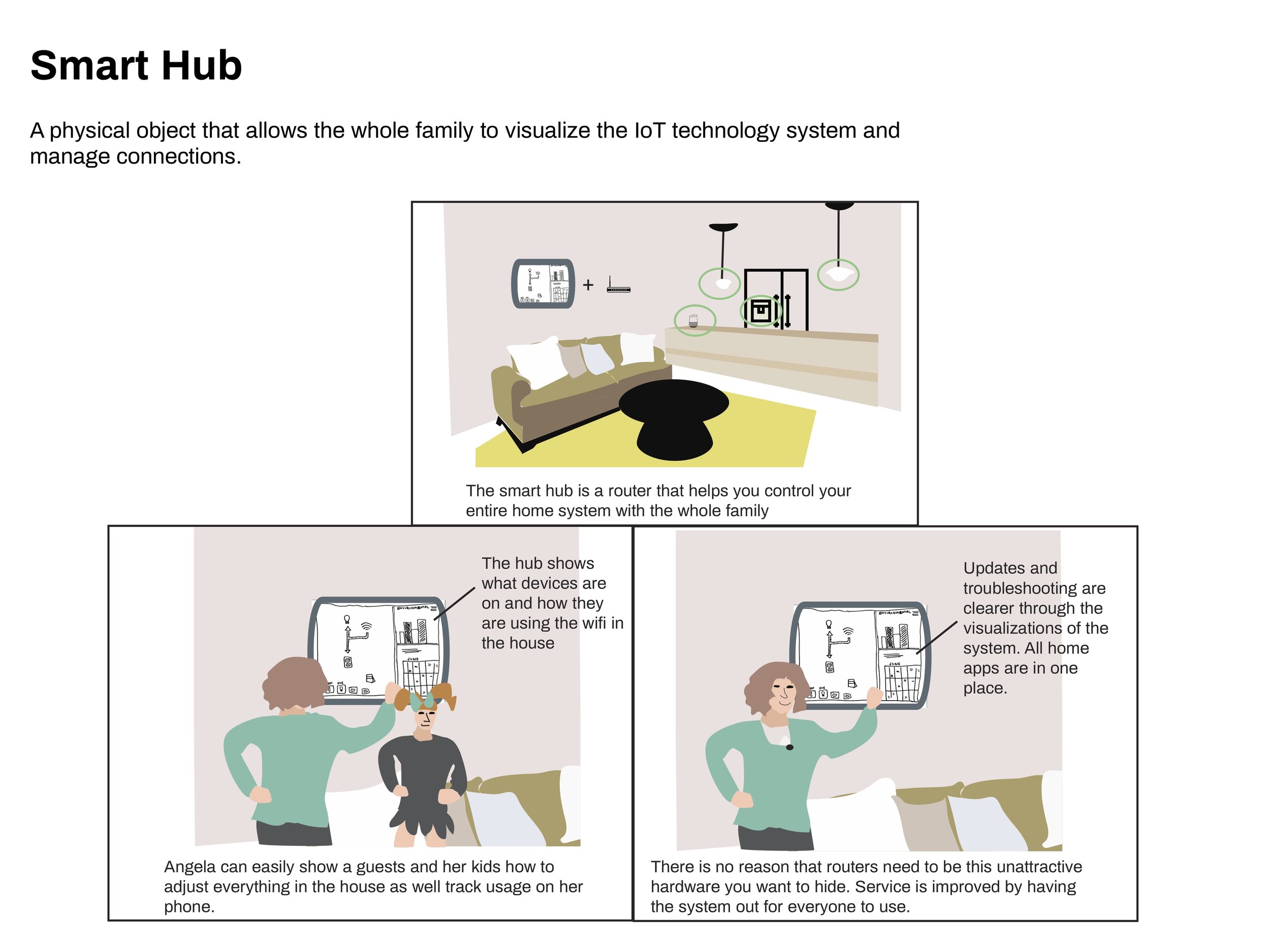

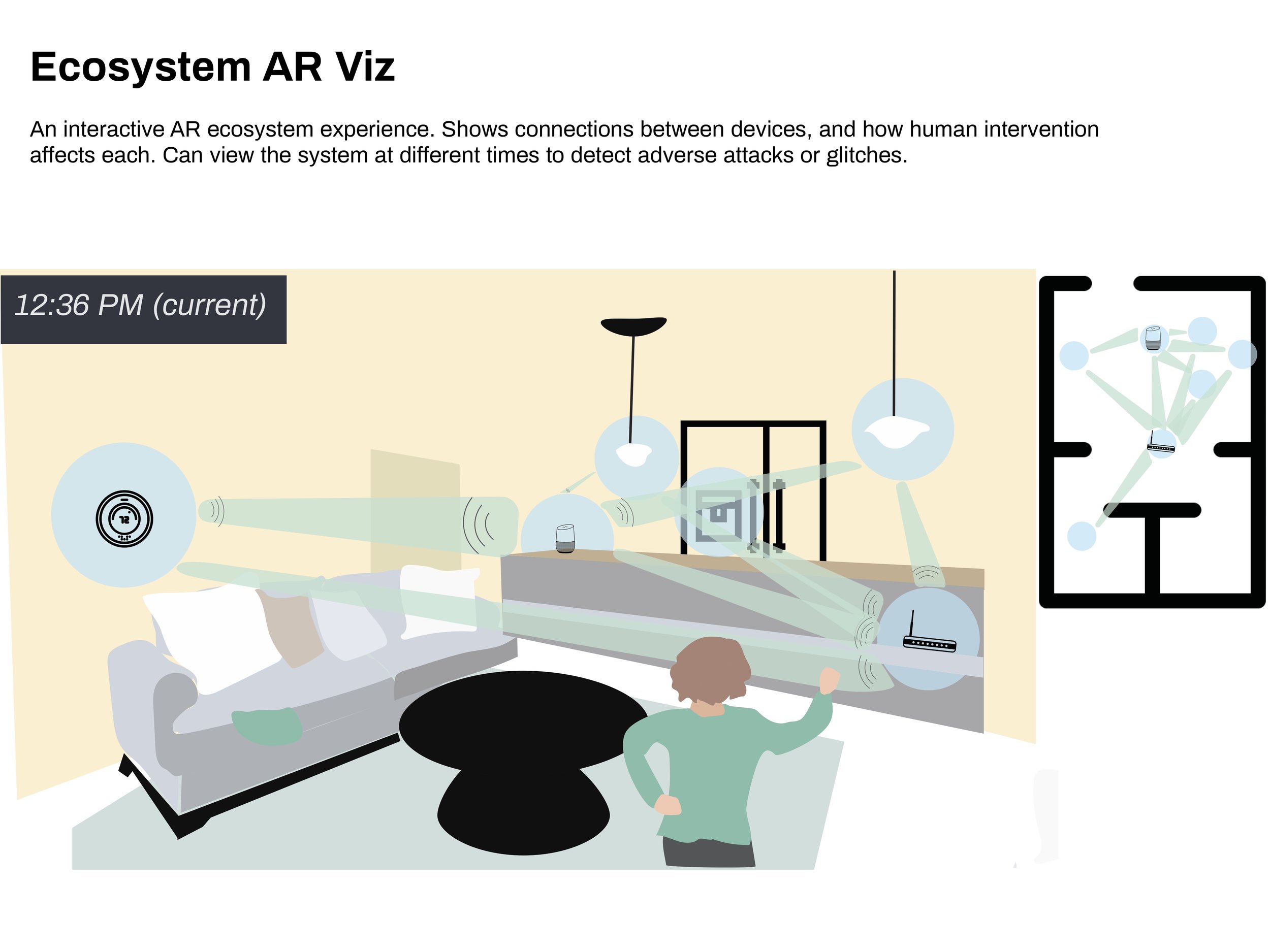

Generating Solutions

Employing a braiding technique keeping insights and design principles in mind (5 minute sketching sprints, meet and discuss, rinse and repeat), we came up with 70 concepts.

A pin-up led us to downselect and combine concepts. The result was 5 broad concepts meant to promote transparency and enhance user control for both techie and casual users.

Usability Testing

I defined clear success metrics before each phase and created note-taking tables to run organized sessions. To absorb and synthesize the sessions, I combined notes and highlighted themes and interesting comments. For each phase, I tested with 5 participants.

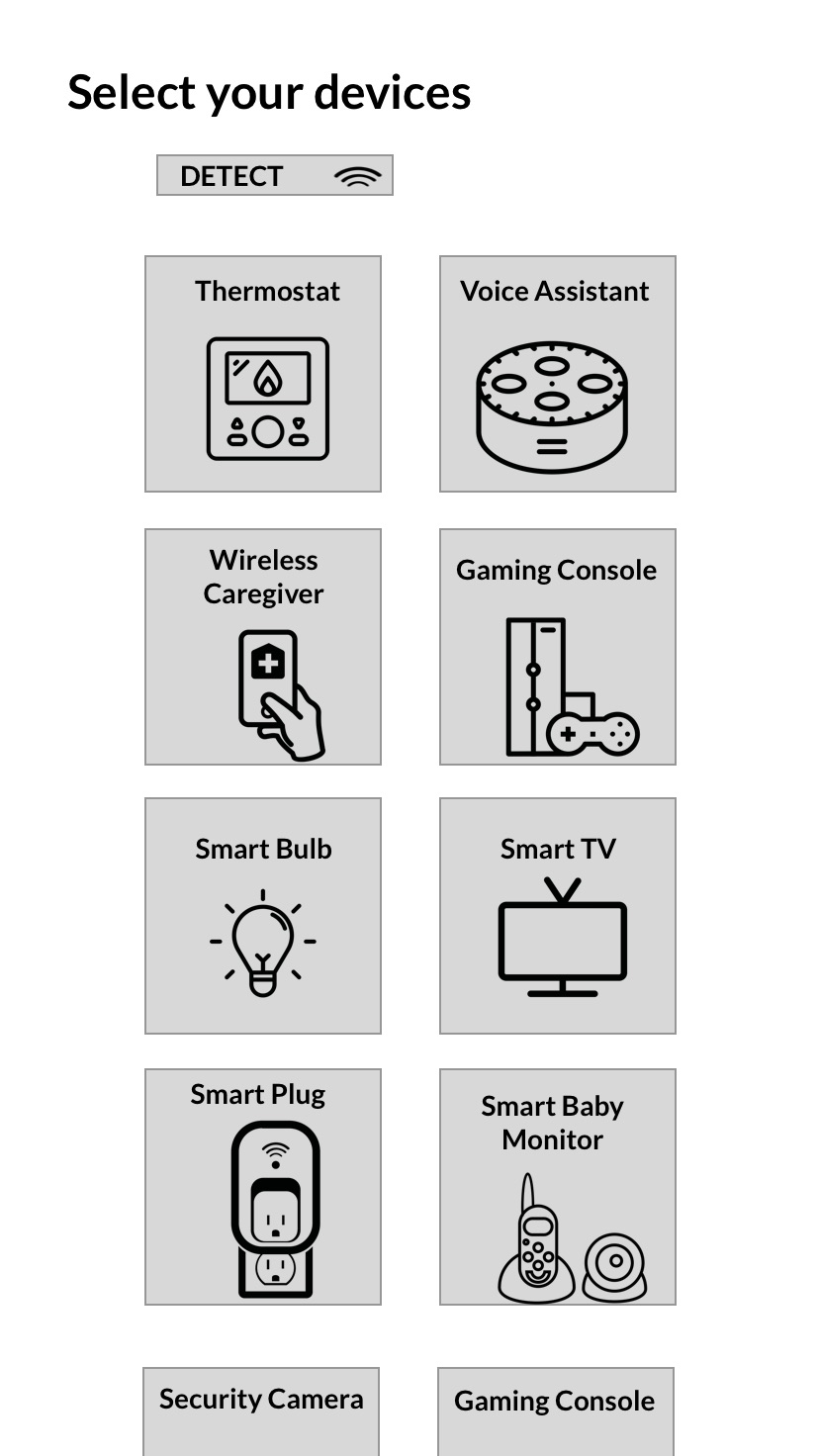

Low Fidelity Testing: Measure Desirability

I presented sketches to participants as a means to test desirability, as well as the effectiveness of different types of system visualization, the likelihood of improving data management, and the variation in privacy preferences in a concerned population.

Each participant had unique opinions about each concept. However, the quiz feature received positive feedback from all participants, so I made it a requirement of the final product. Other requirements included providing information across devices and privacy preferences, and supplying clear actions.

“It’s consumer-friendly to make something actionable.”

“The quiz could be very powerful.”

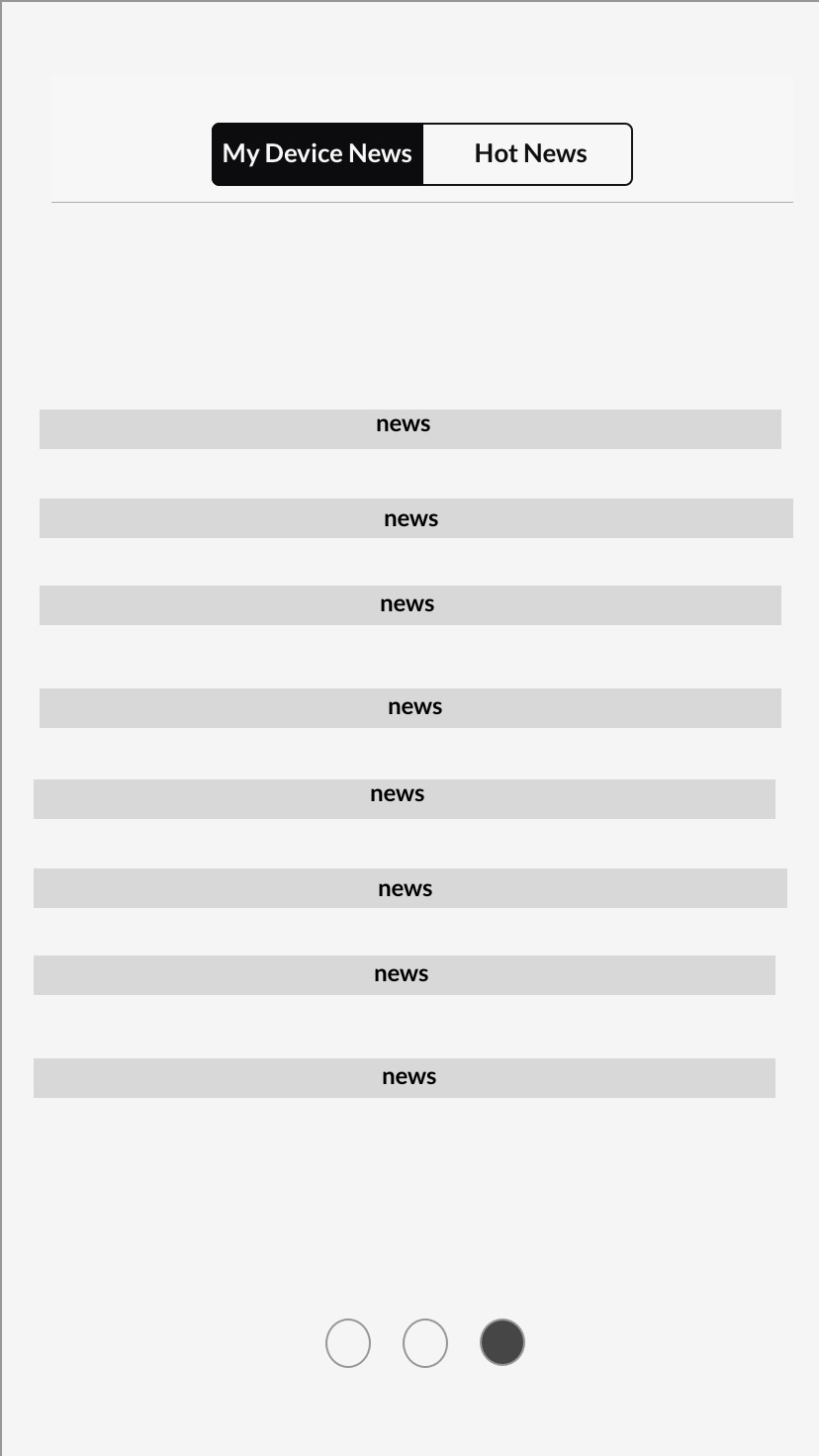

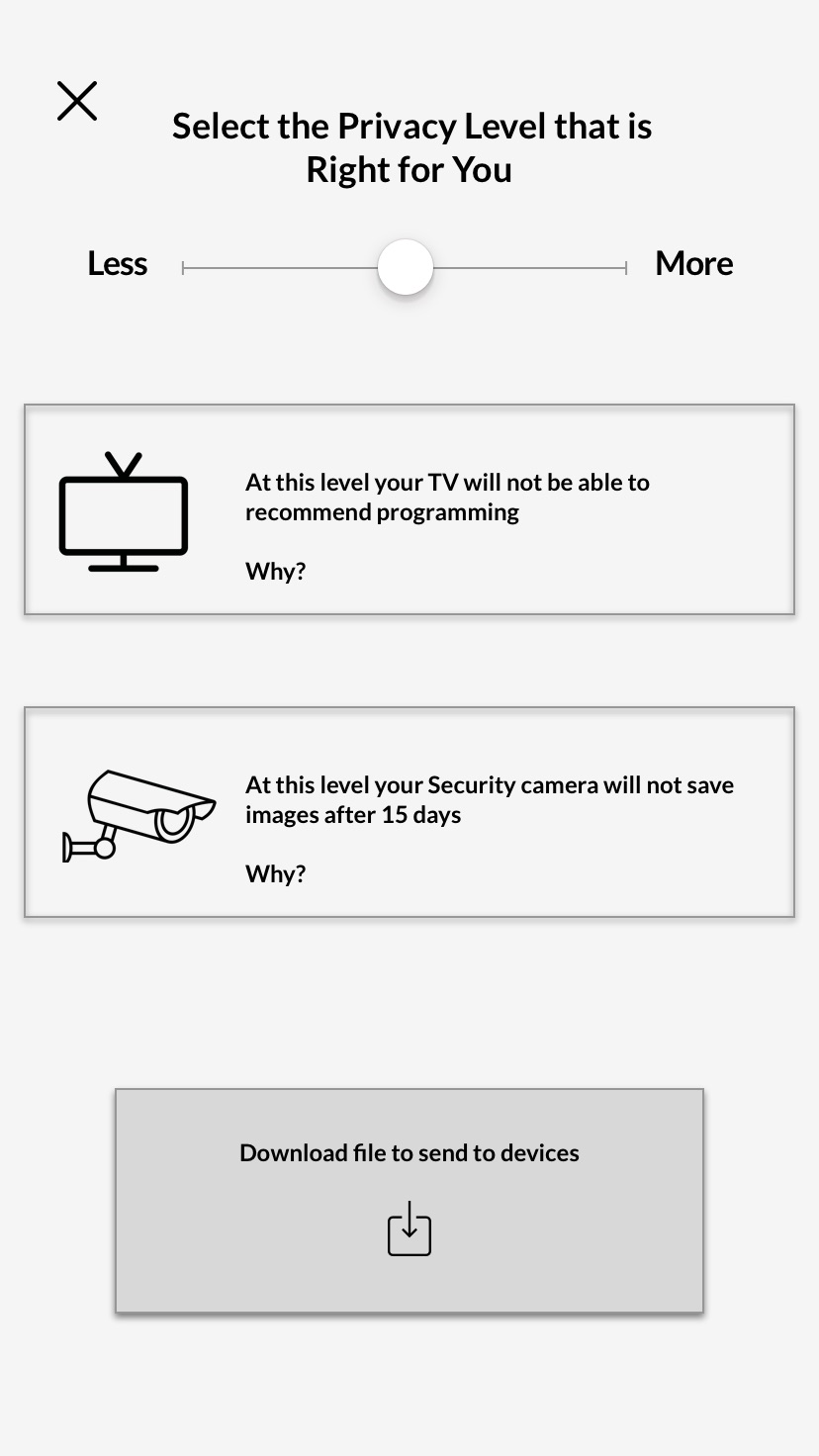

Medium Fidelity Testing: Identify Feature Flow

My team and I combined features into a mobile phone app with questionnaire, news, and actions features. Using simple interfaces, InVision, and a Card Sort, I tested the flow of the app and answered questions about trust, form factor, questionnaire questions, and a reminders feature.

Challenges: People did not think in categories such as “change monitoring behavior,” but rather by device, meaning the solution should provide device-by-device control. Viewing the tradeoffs was highly desirable to most participants.

System Visualization: The “hub” feature was abandoned because it was confusing to casual users, but not functional enough for techie users.

News Feed: Test participants liked how the news legitimized our information, but said they would not scroll through the feed. I decided to incorporate links into the actions, rather than on a separate page.

System Architecture: Splitting up information was confusing. Furthermore, participants said they would rather complete all the challenges in an afternoon than return to the app over time. I decided to adopt a “dashboard” model and change the form factor to a website rather than an app.

“I want to see not just how to restrict my info, but in what areas the risk is outweighing the payoff.”

“I would still want a way to do it individually. If it was able to handle every device all at once, most people would do that, but for me I would nerd out and do it device-by-device.”

High Fidelity Testing: Refinement

CONFIRMING FEASIBILITY

I needed to see for myself that informed people could take back control. I researched products using company websites, reviews, and tech blogs to find up-to-date actions on a number of devices and brands, and how taking those actions would change the product. I then combined the information and identified themes, such as time of intervention (many settings are decided at the point of set-up), and length of intervention (for example, temporarily turning off location services).

USABILITY TESTING

I tested by linking screens together with Sketch’s prototyping tool and conducting a guided clickthrough with participants. I queried about expectations before clicks, and reactions afterwards. I observed usability and desirability.

Older version of screen, used in usability testing.

Observation 1: The detailed directions could be more direct.

Impact on design: Interactive changes to the picture as the user moves through the steps, with cursor highlights and up-to-date screenshots.

Observation 2: People desire exploration, and the system supports it.

Impact on design: Make sure people know the service is free and you don’t need an account to explore.

“It’s encouraging me to play with it and vary it, which is what I was hoping for.”

Older version of screen, used in usability testing.

Observation 3: Actions are useful and the purpose is understandable, but information should be more immediately available.

Impact on design: Added a search box that goes directly to actions, news articles, and best practices.

Specifications and Results

I created models and visualizations to fully document our design response and the structure of Devicable, with the same level of detail as a document that would be passed on to engineers or technical teams in a company setting. Aesthetic and functional details were decided based on research: the user needs and impressions we took away from our many conversations, and how other decision-making websites function.

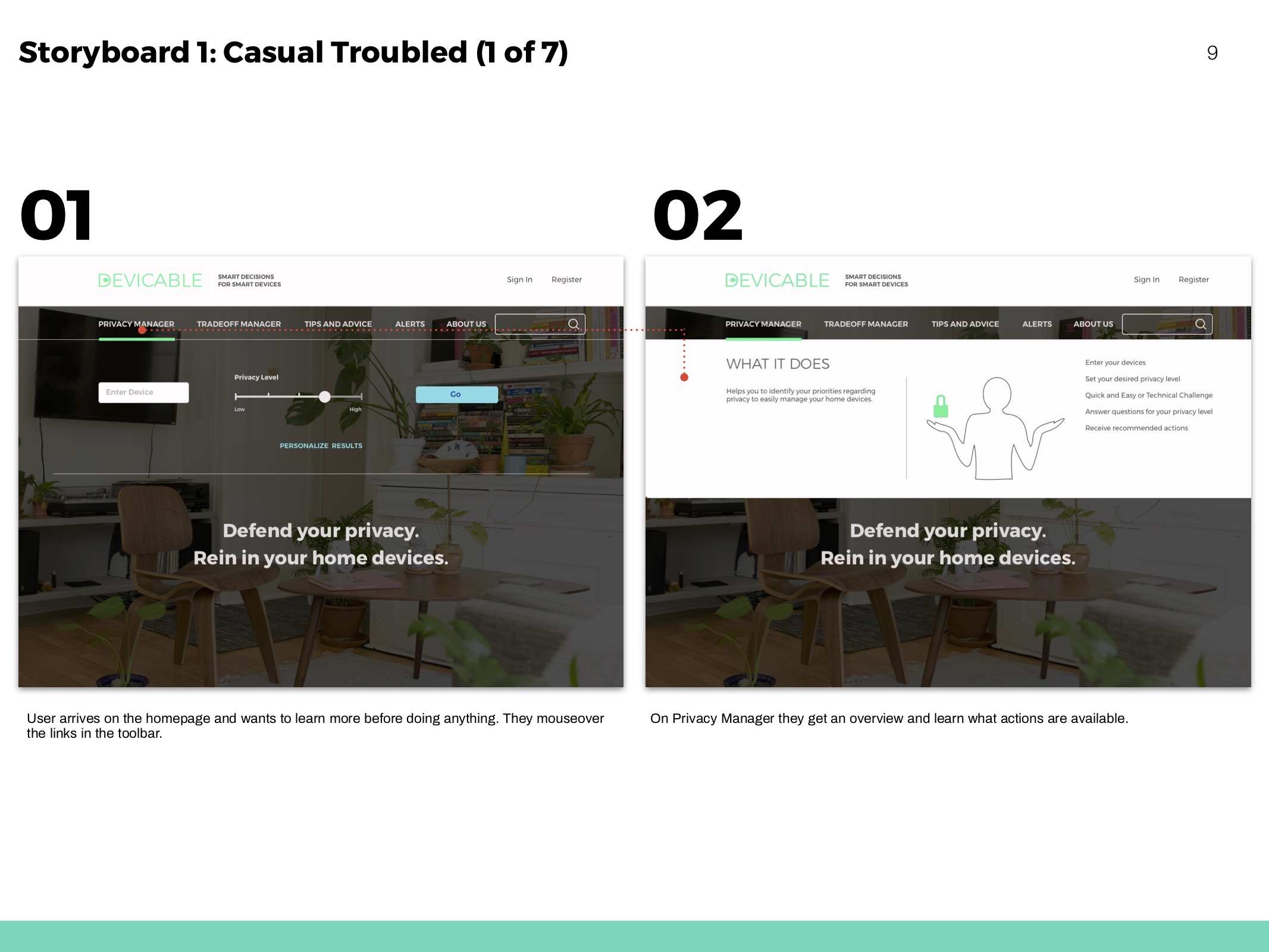

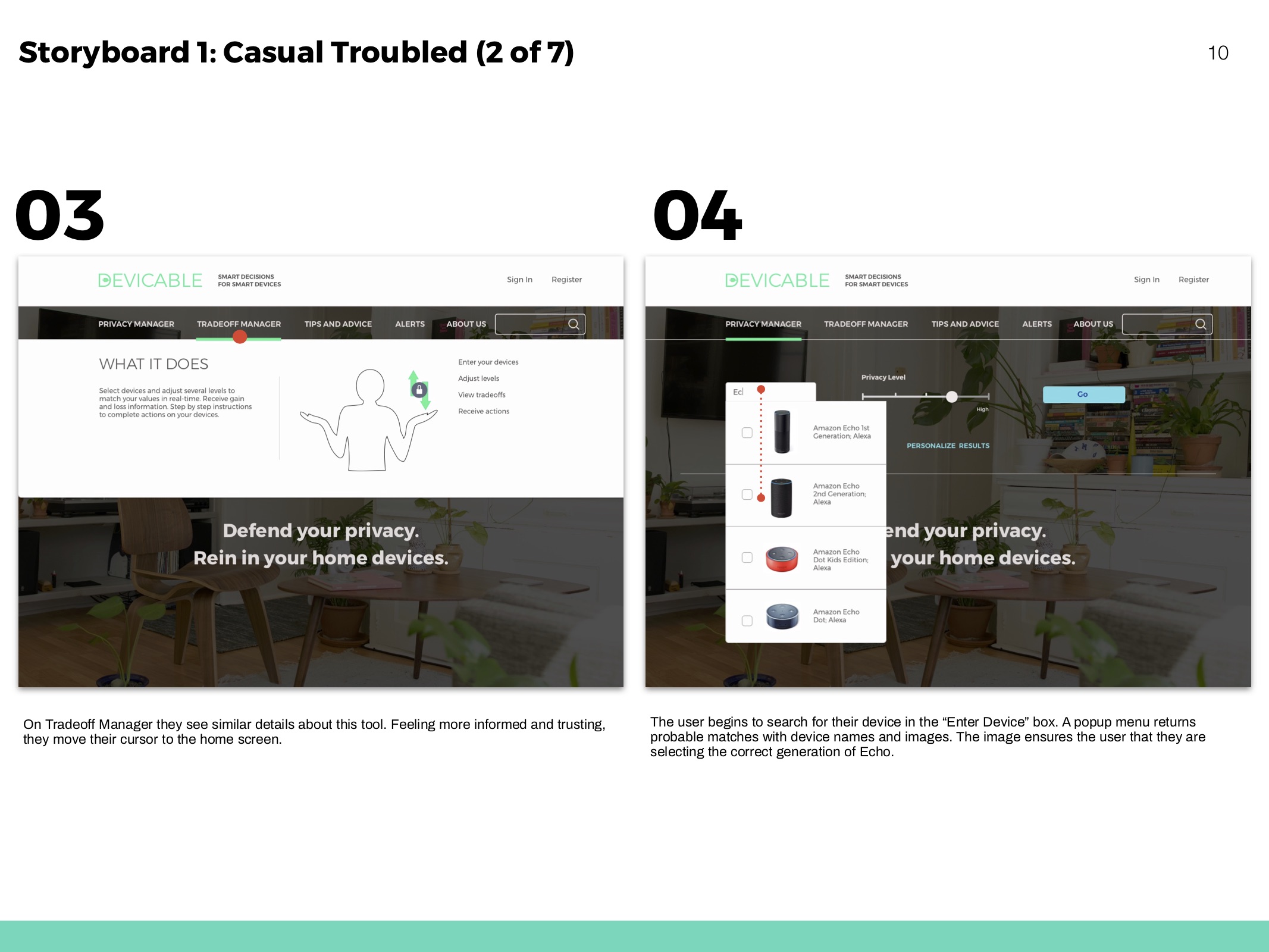

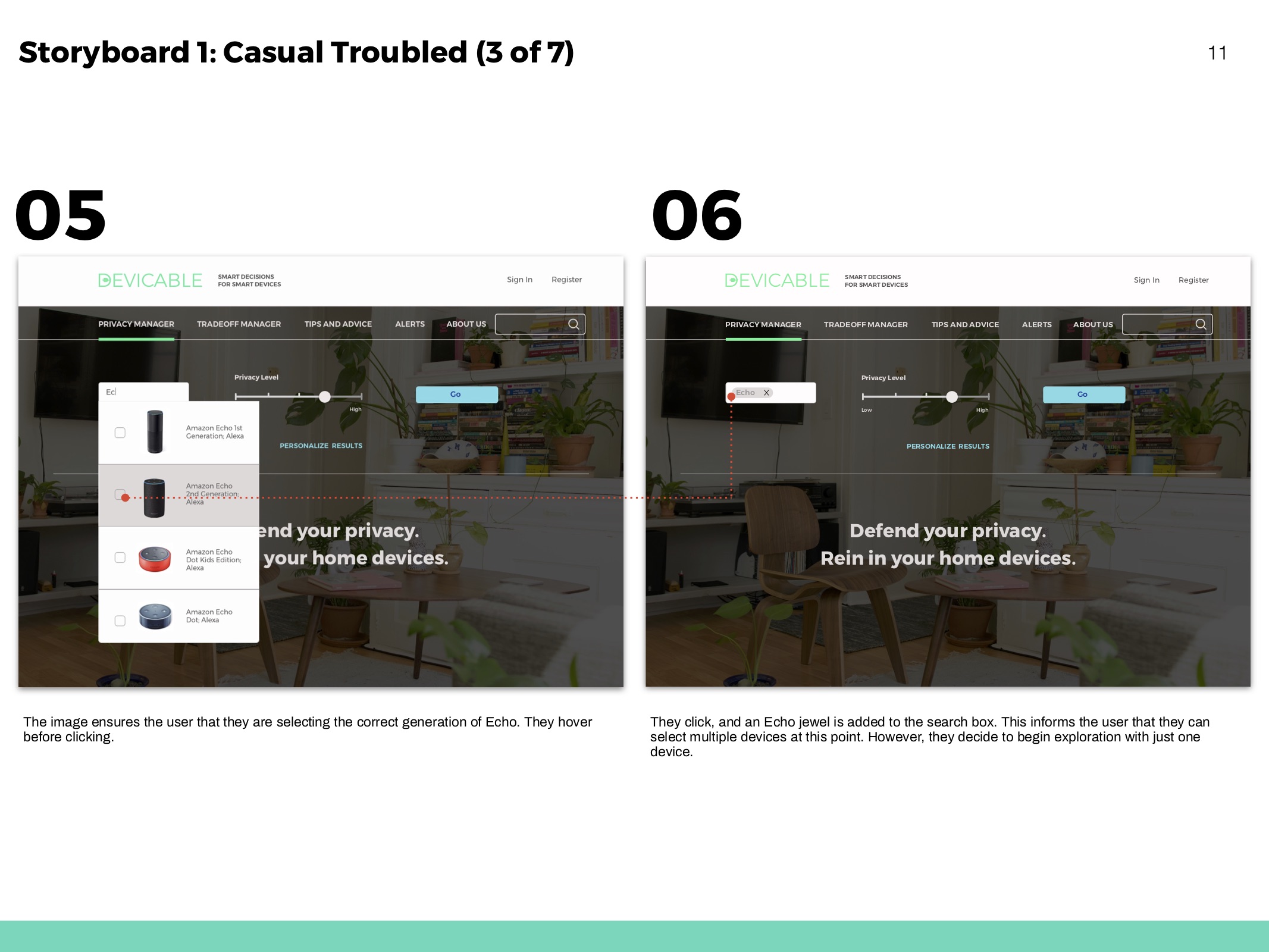

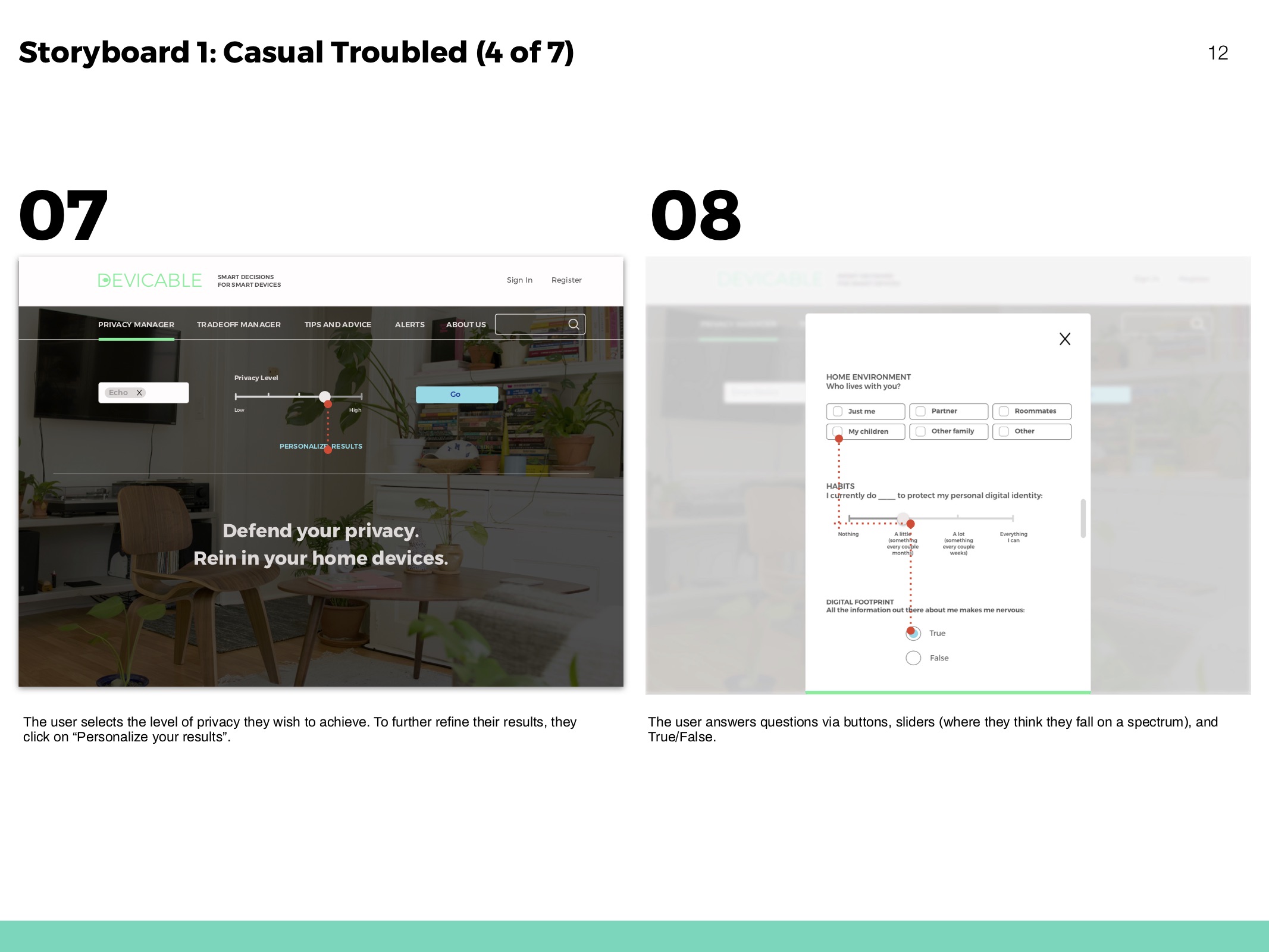

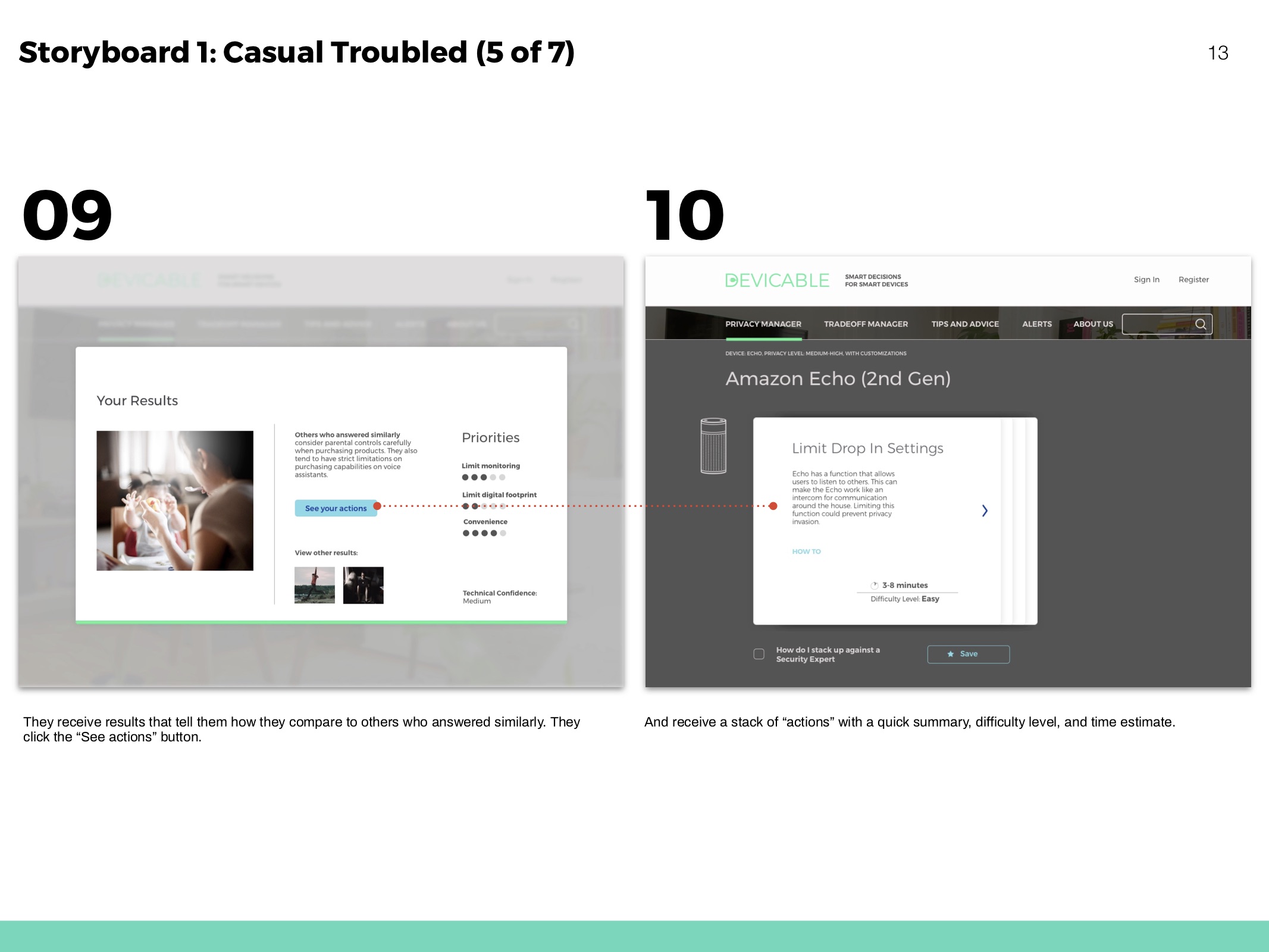

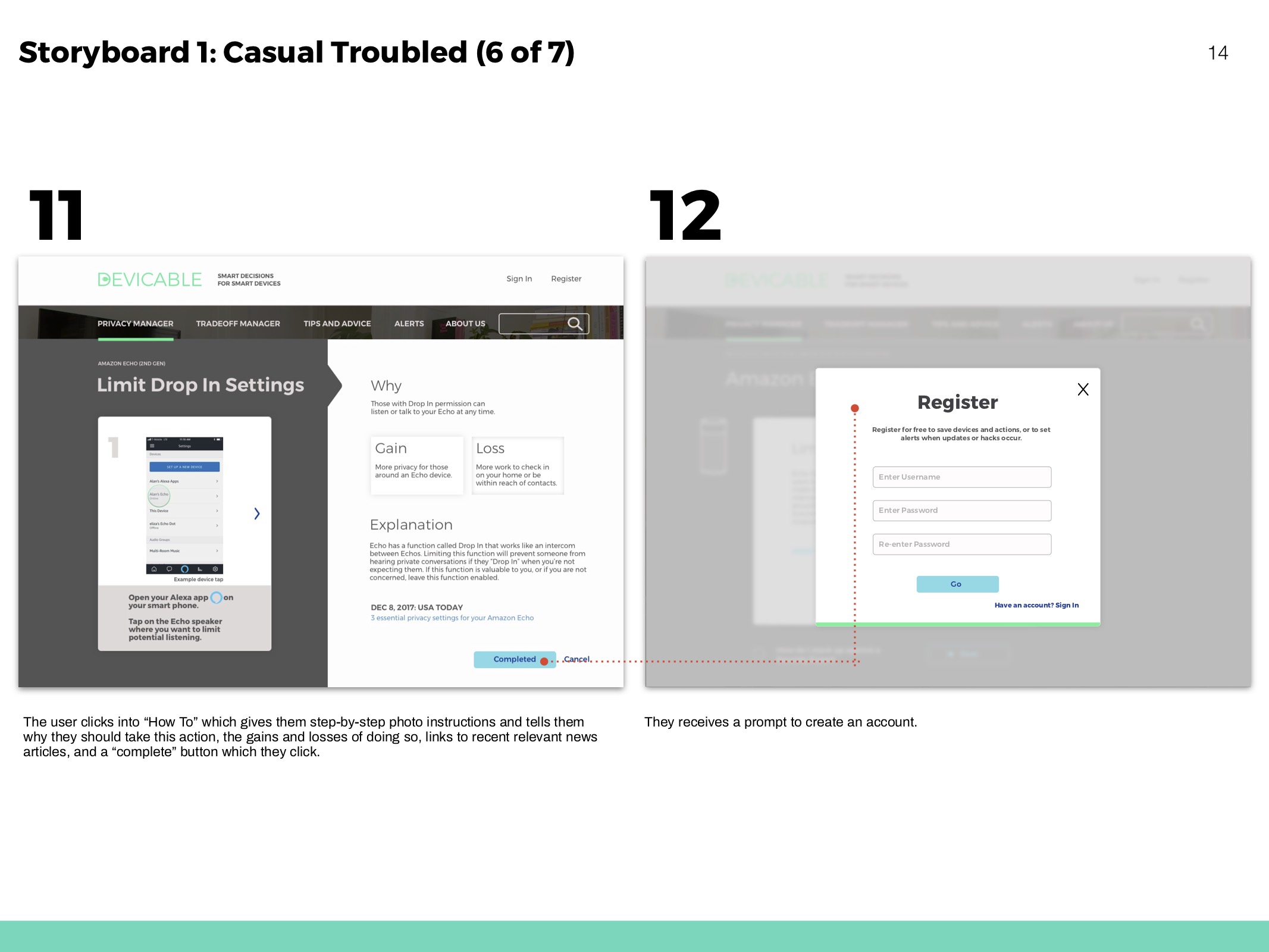

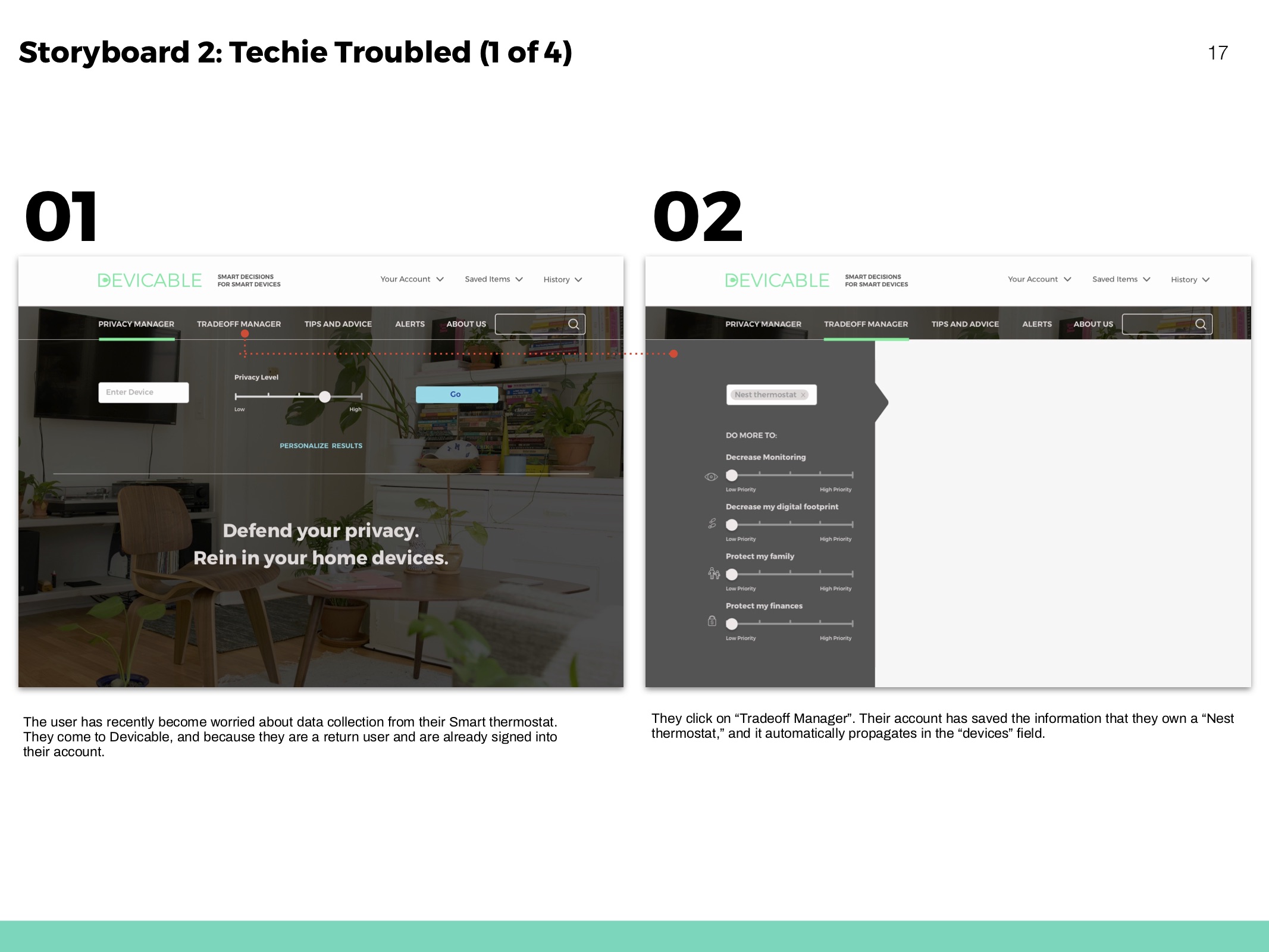

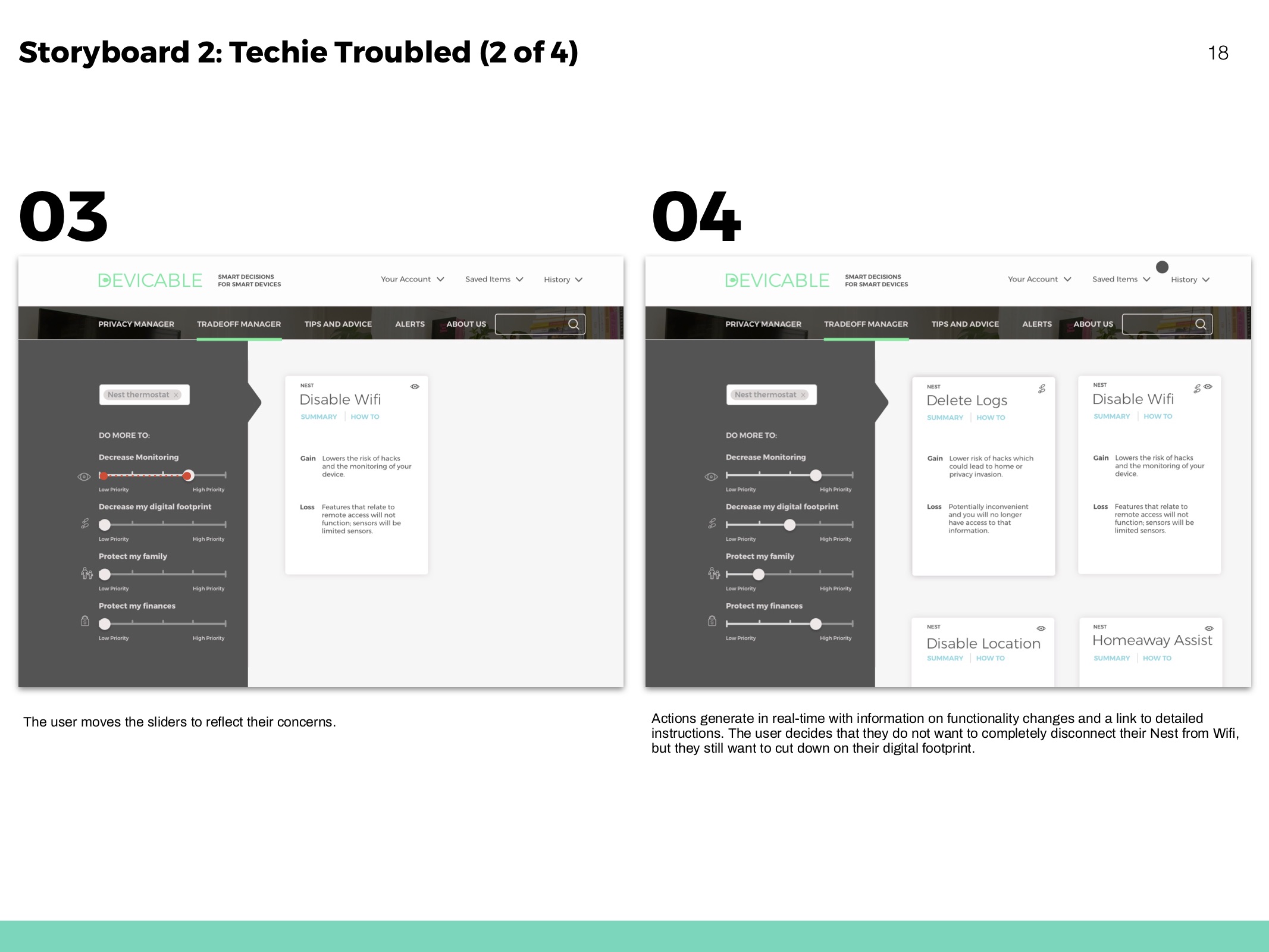

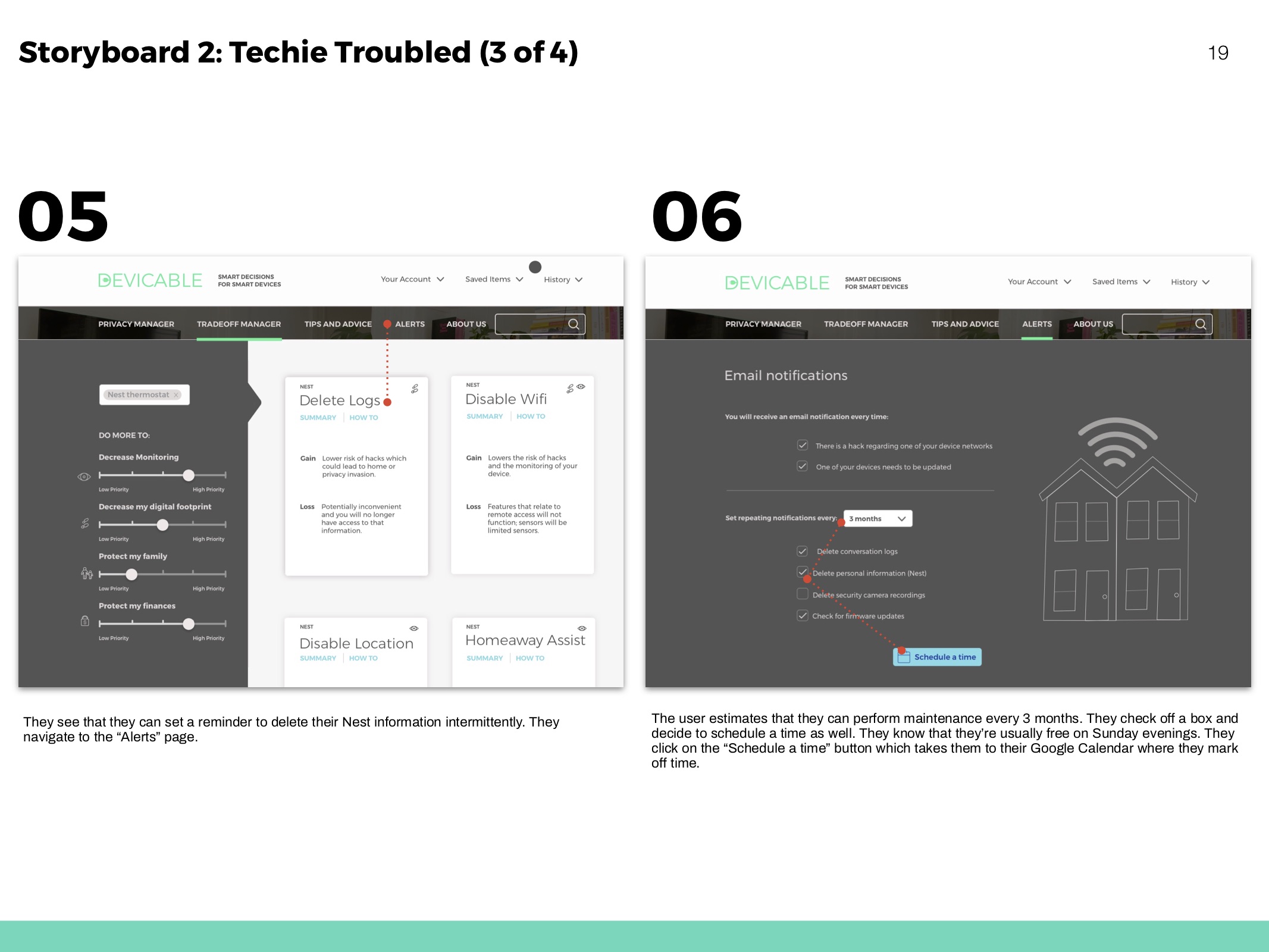

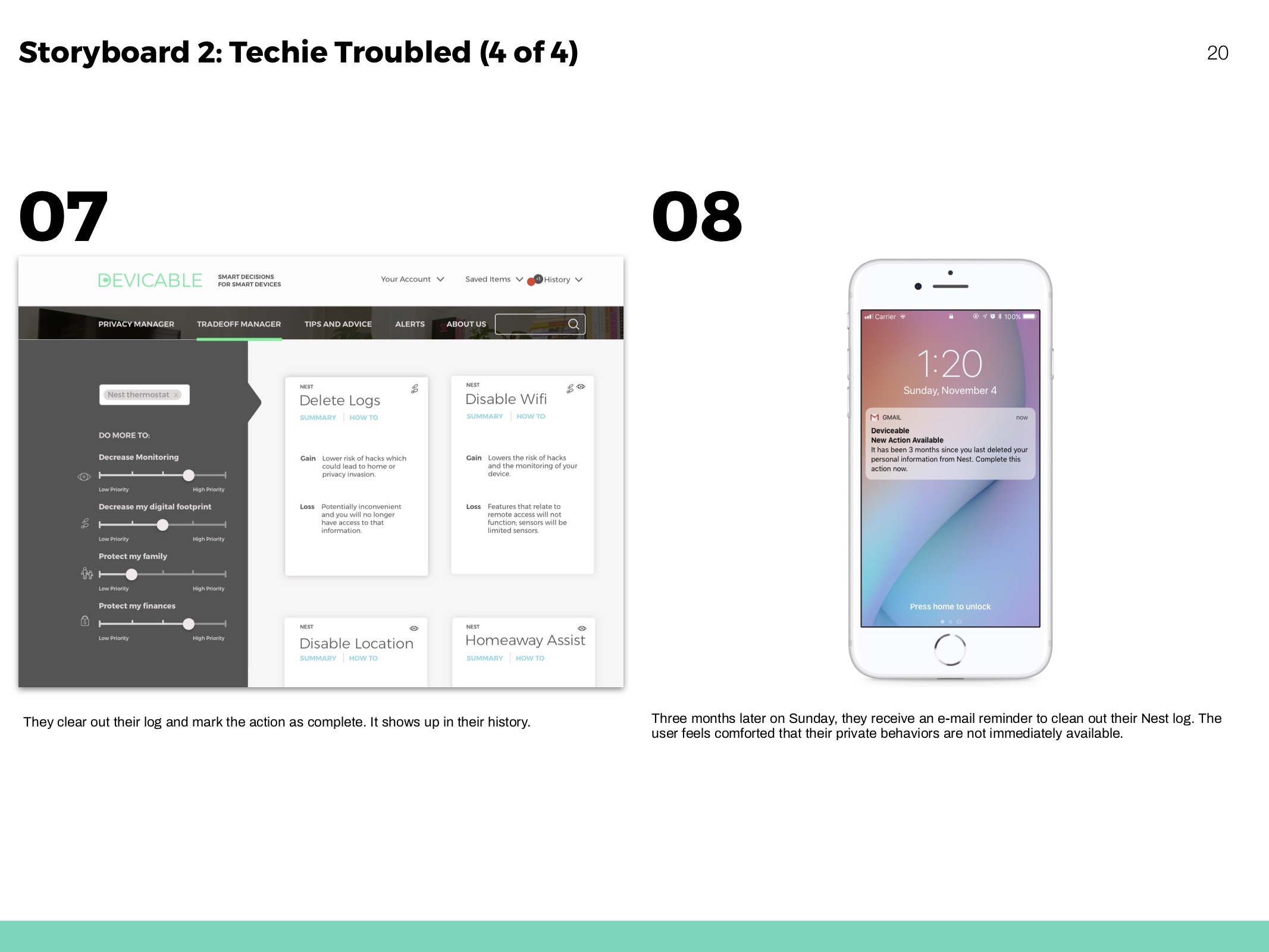

Storyboards

Hero flows based on personas, showing movement throughout the website.

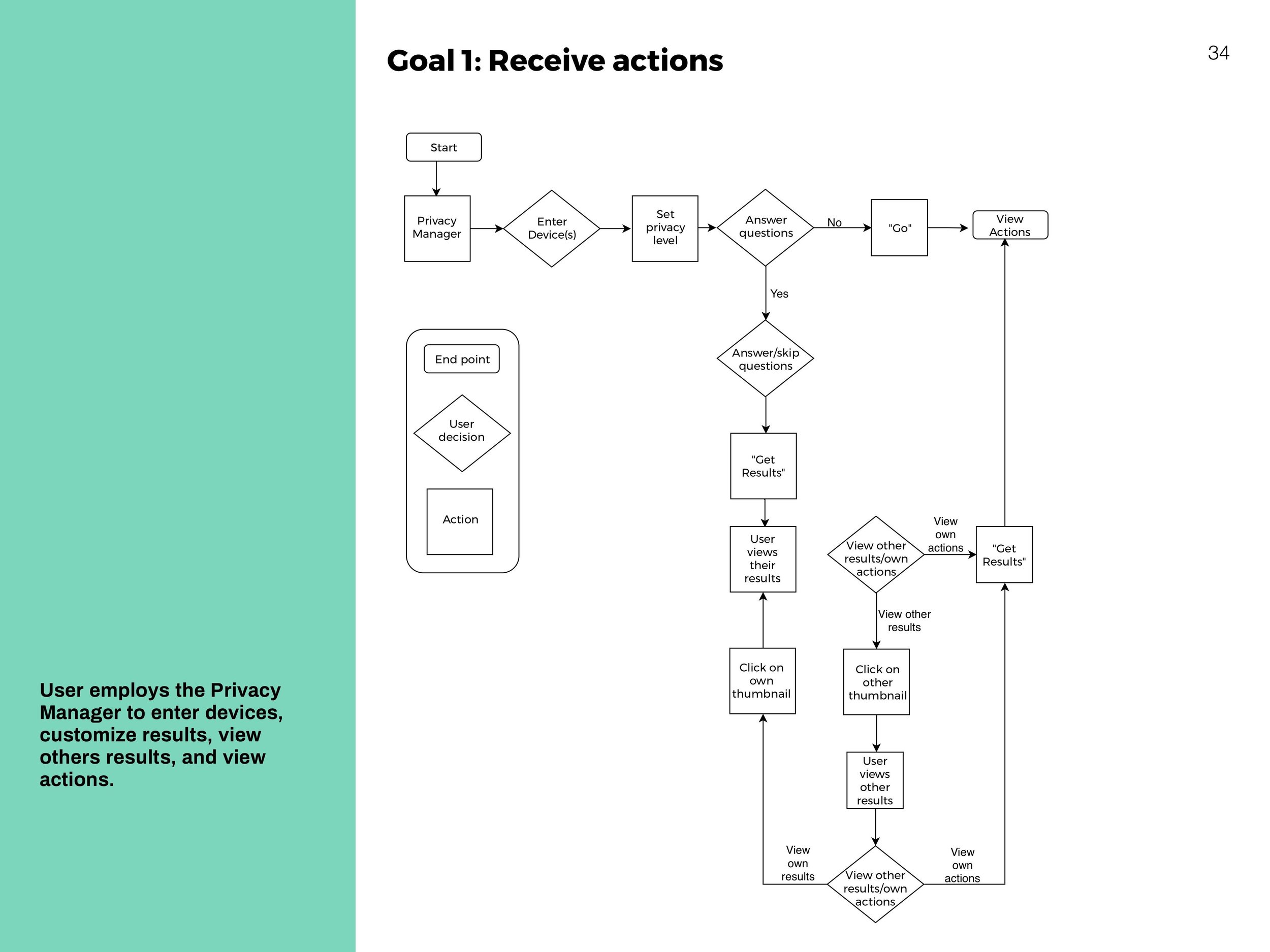

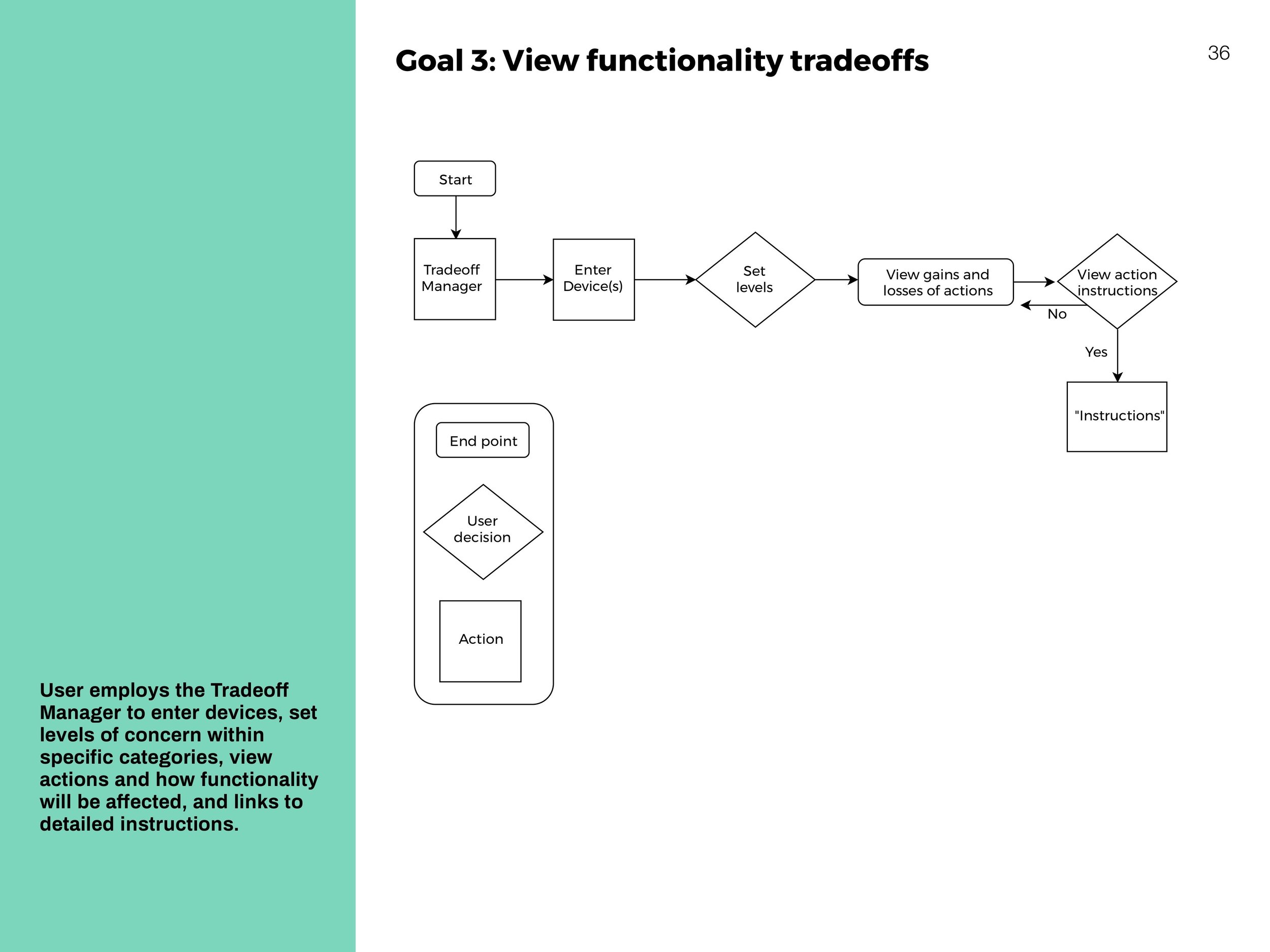

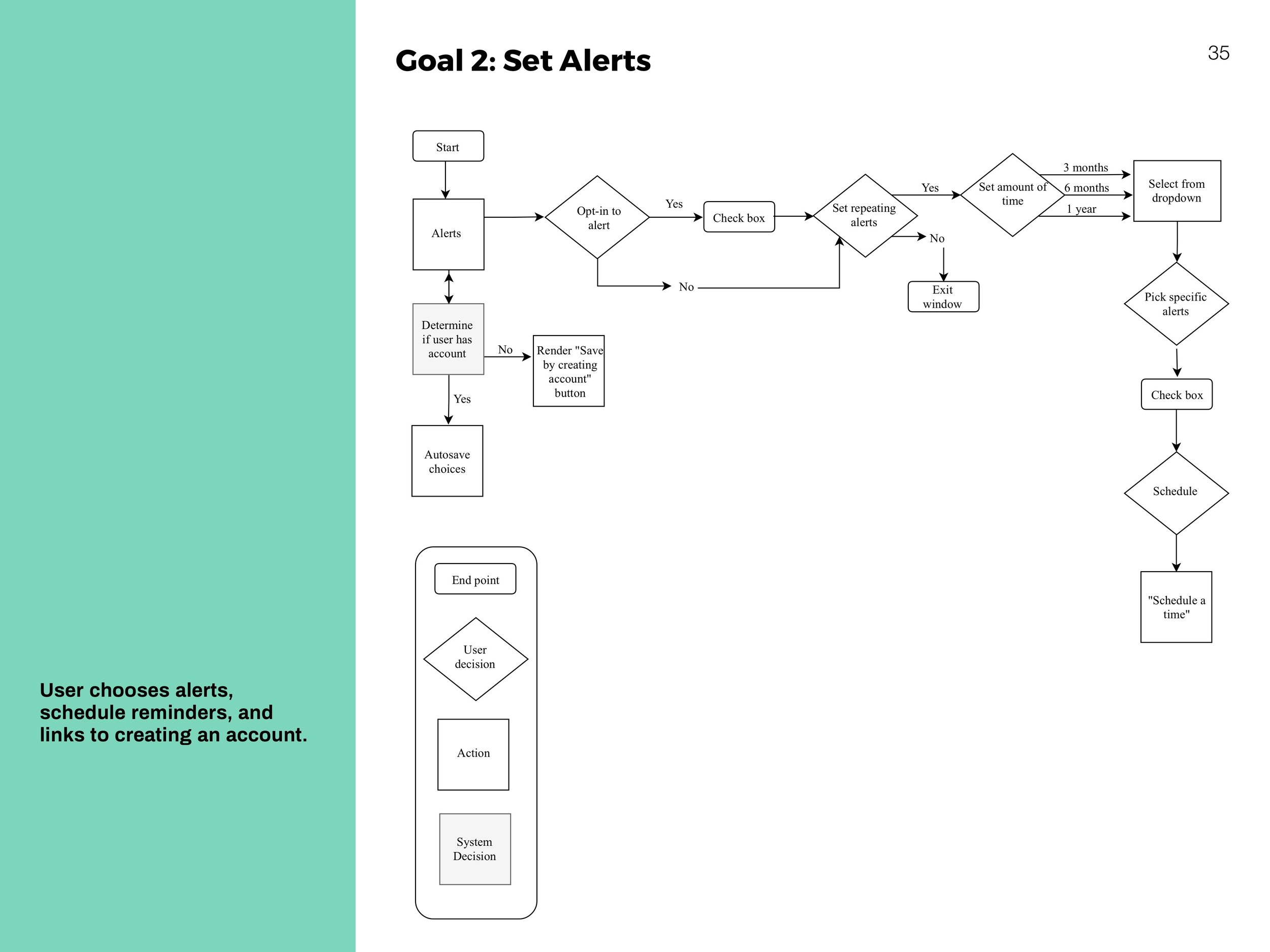

Interaction Flows

System-centric charts to show movement throughout the website.

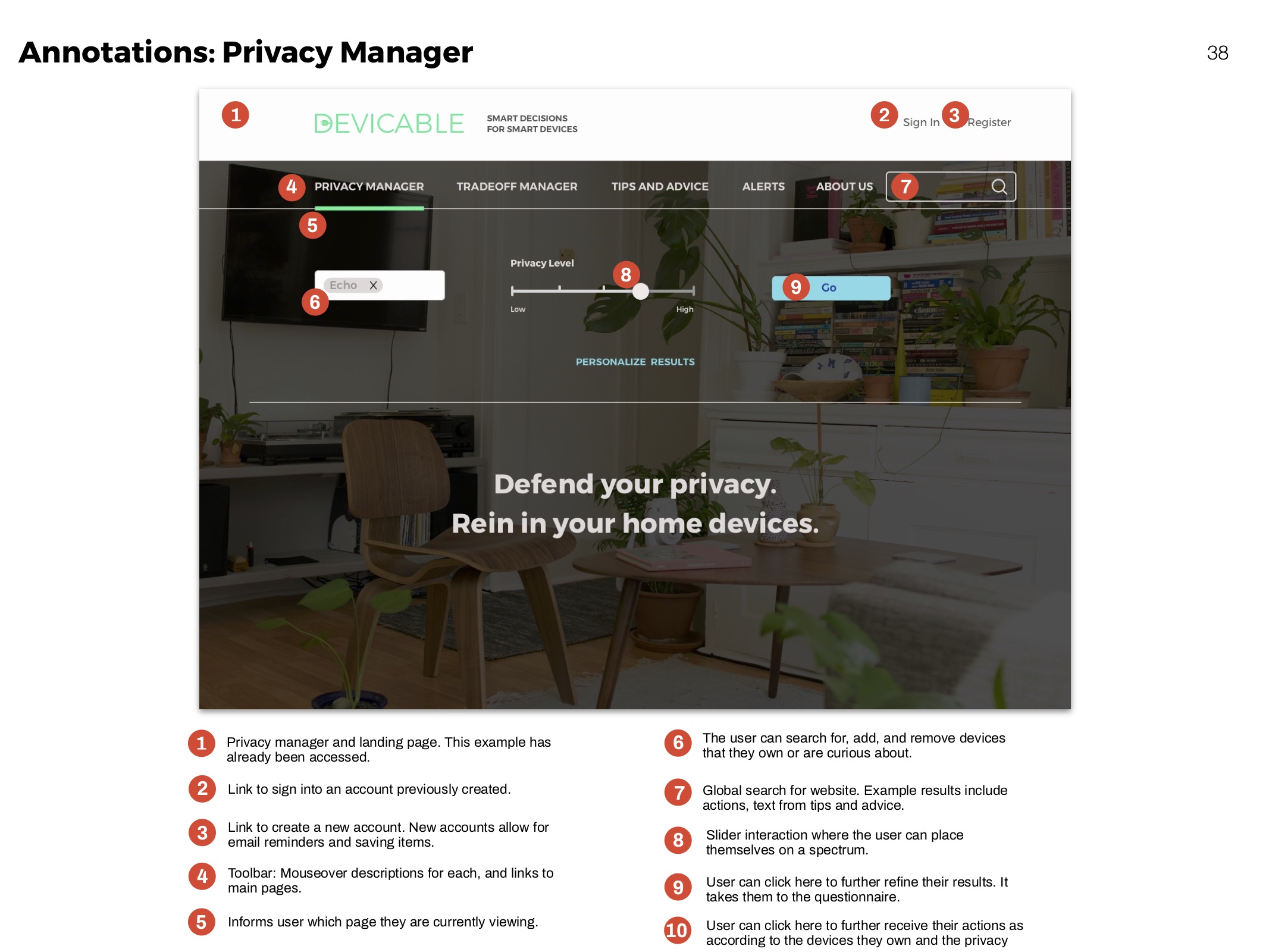

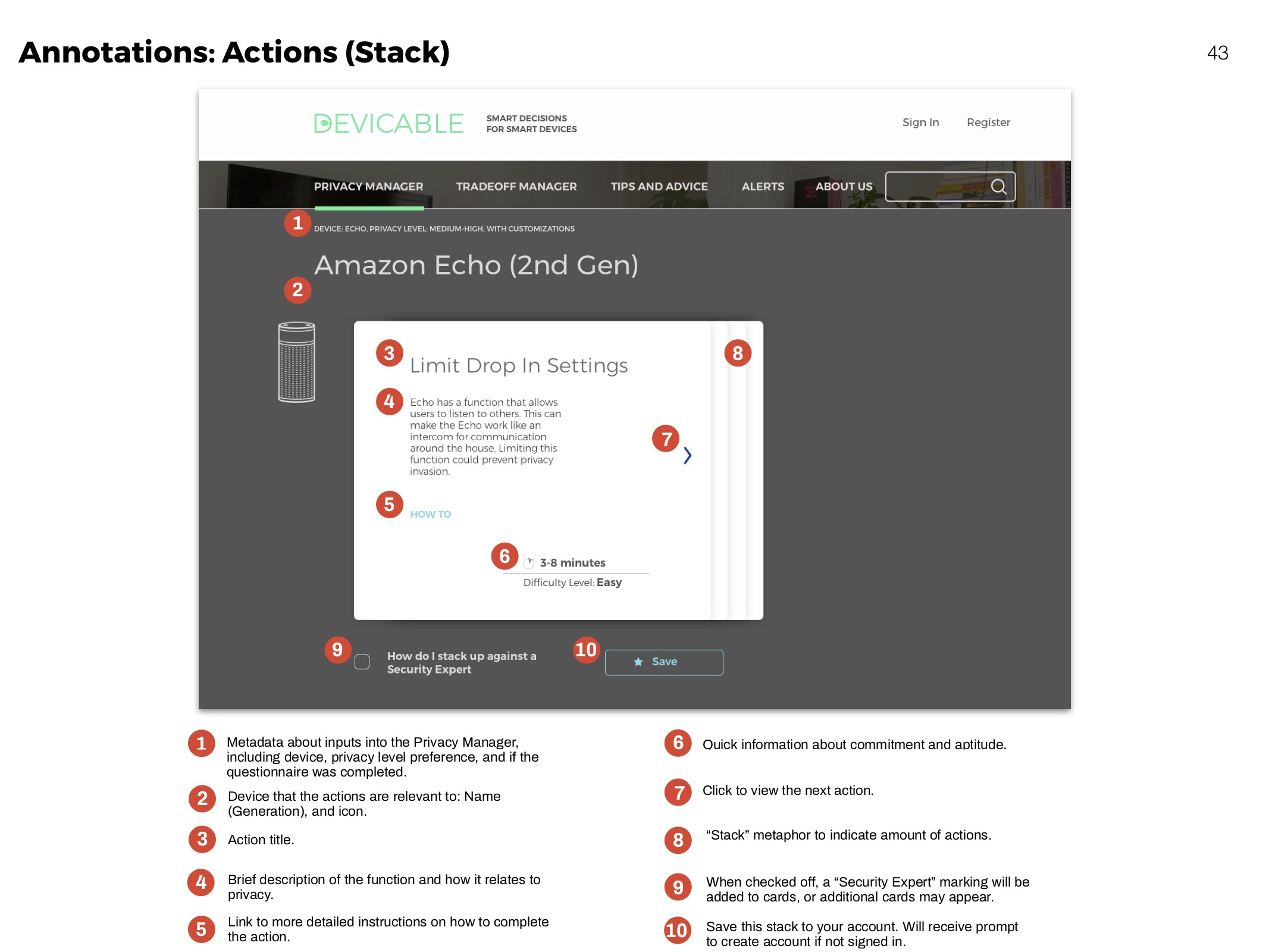

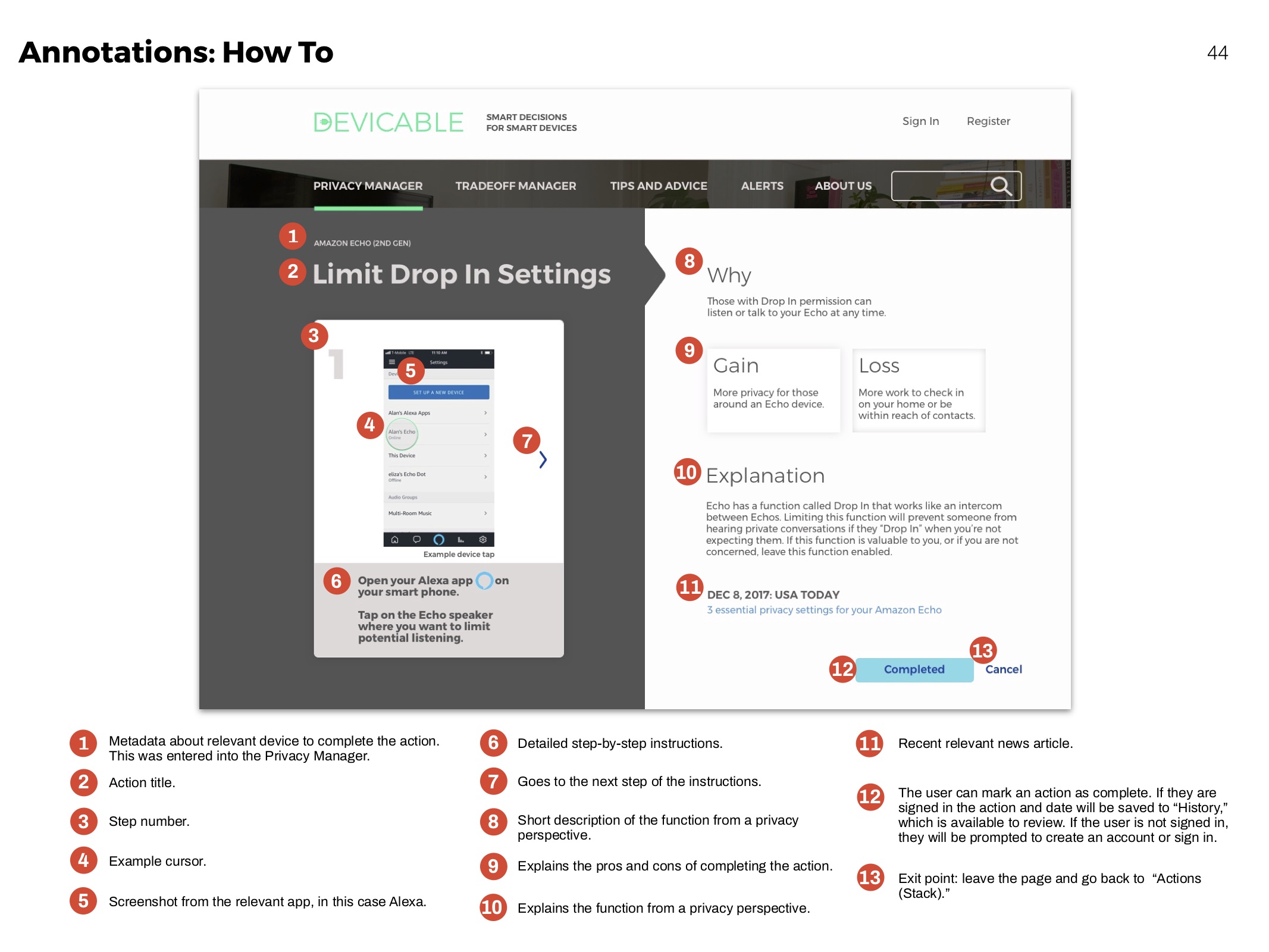

Annotations

Clarifying functionality of elements for development.

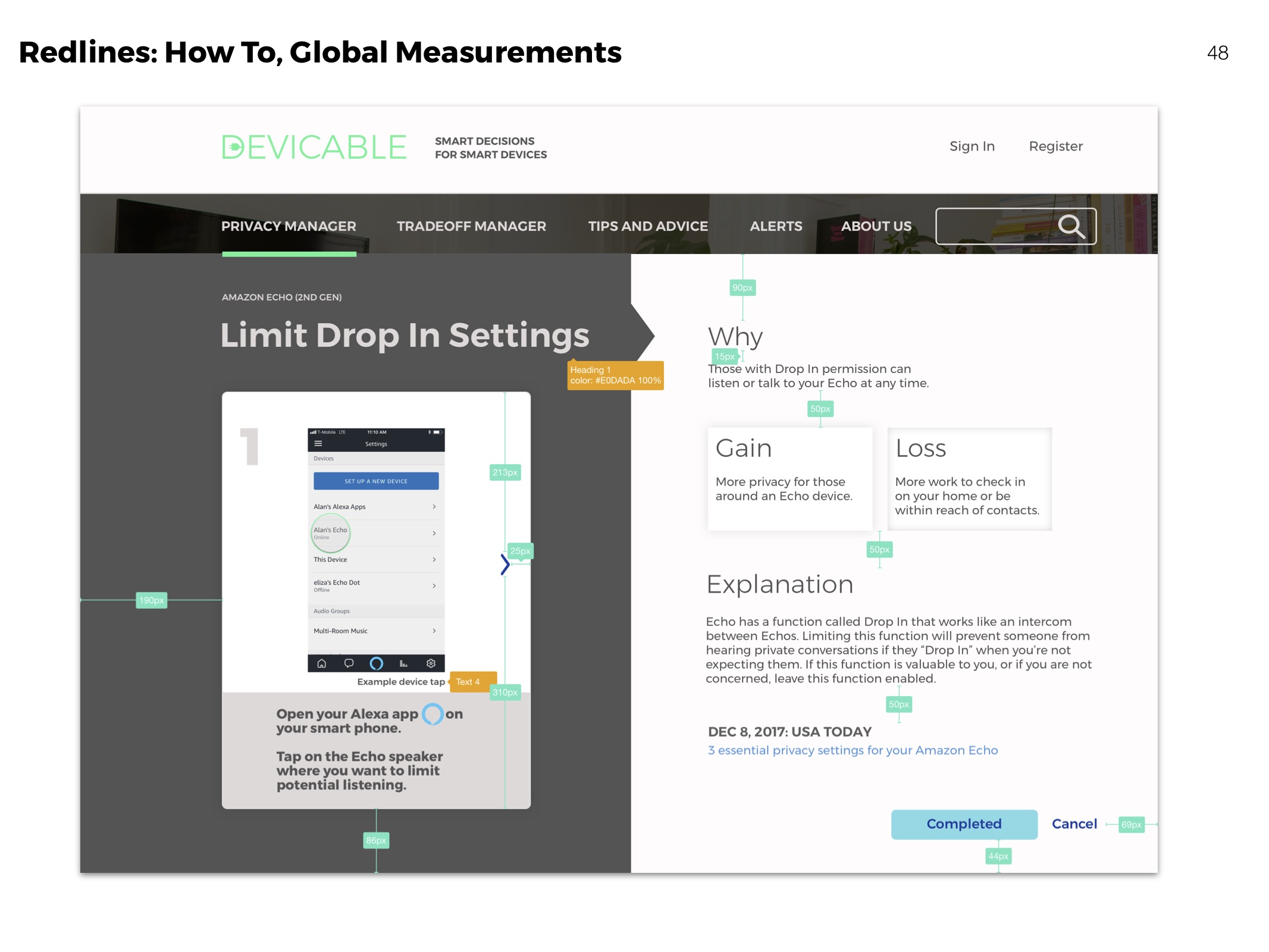

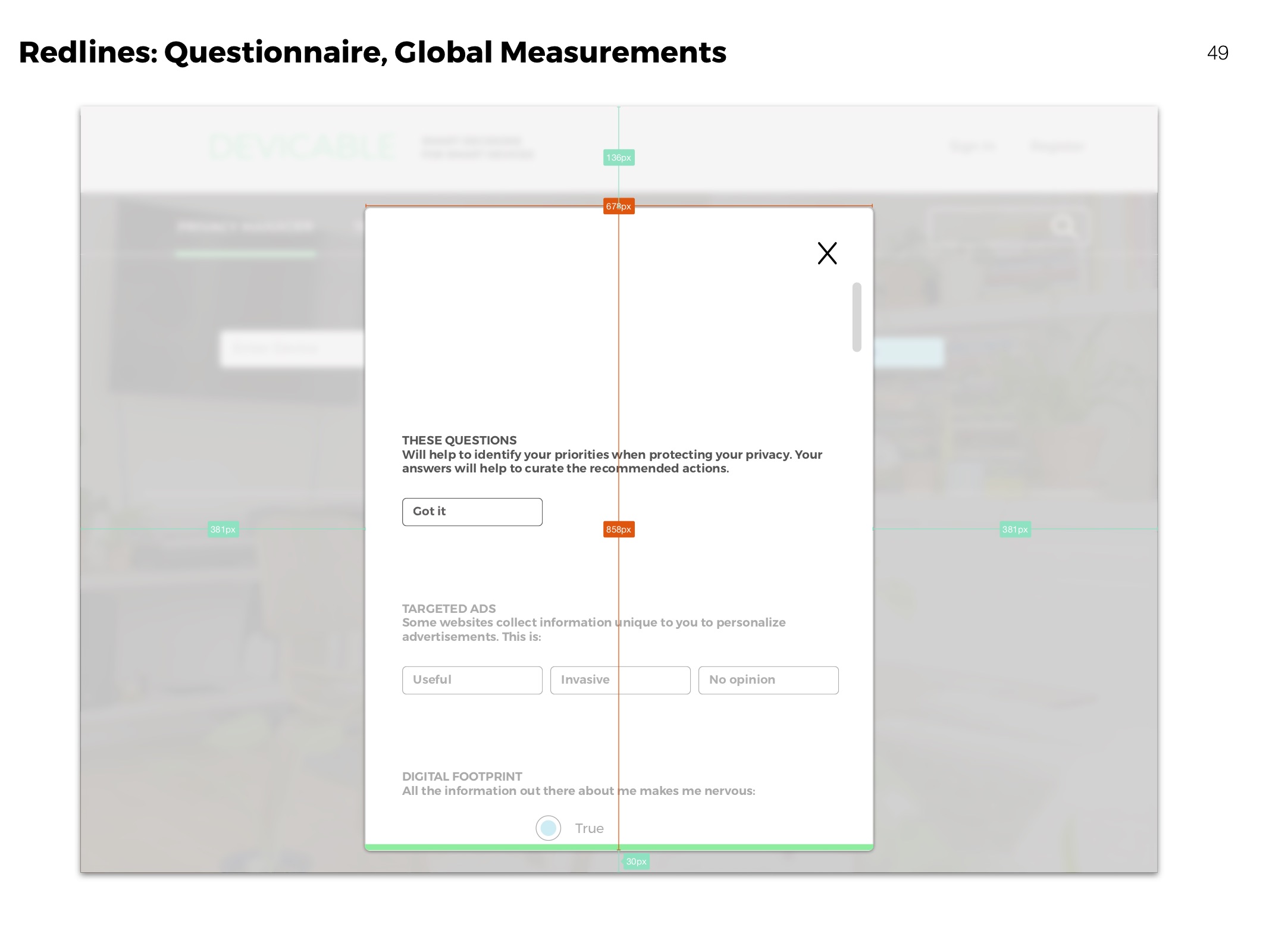

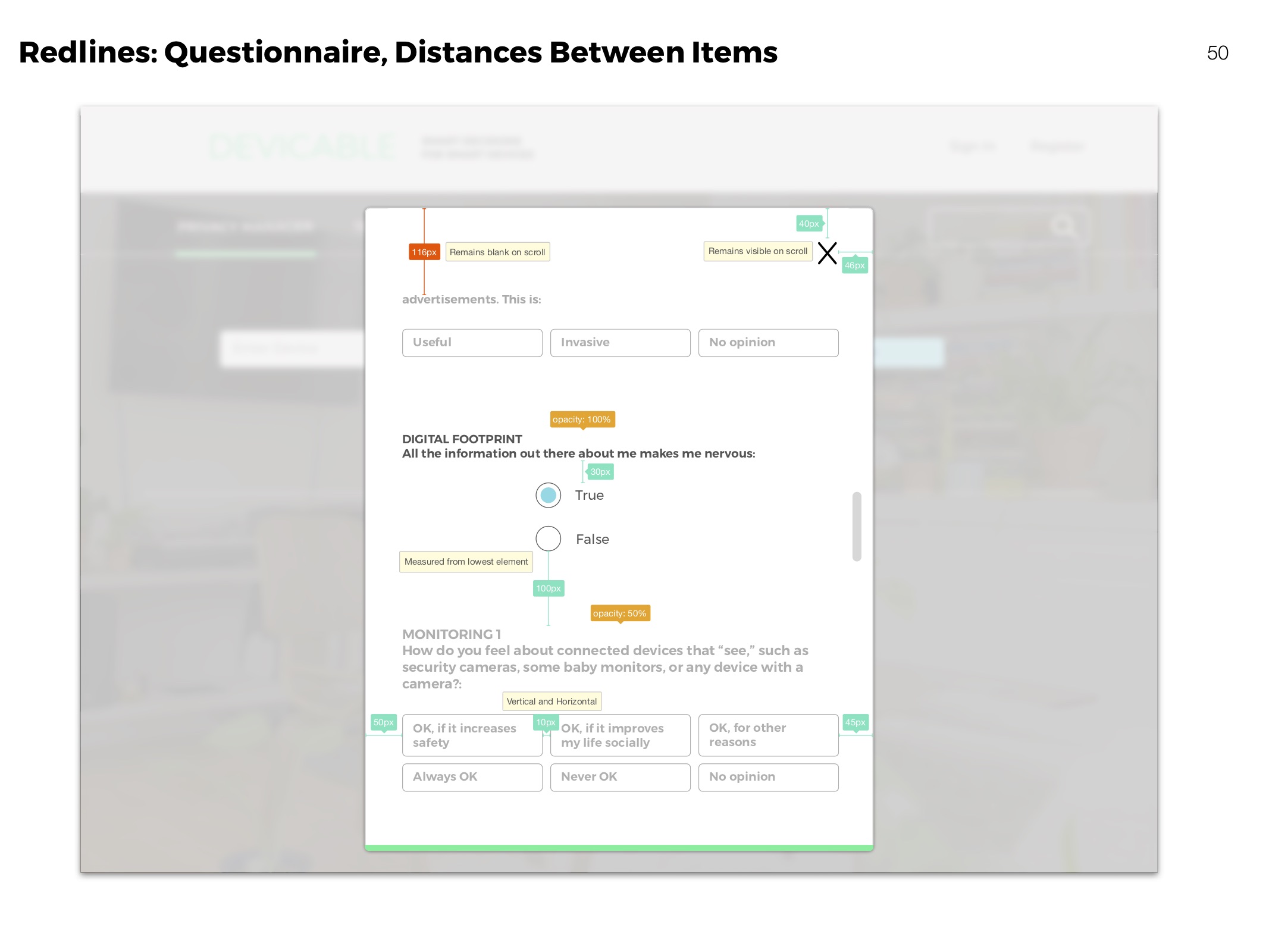

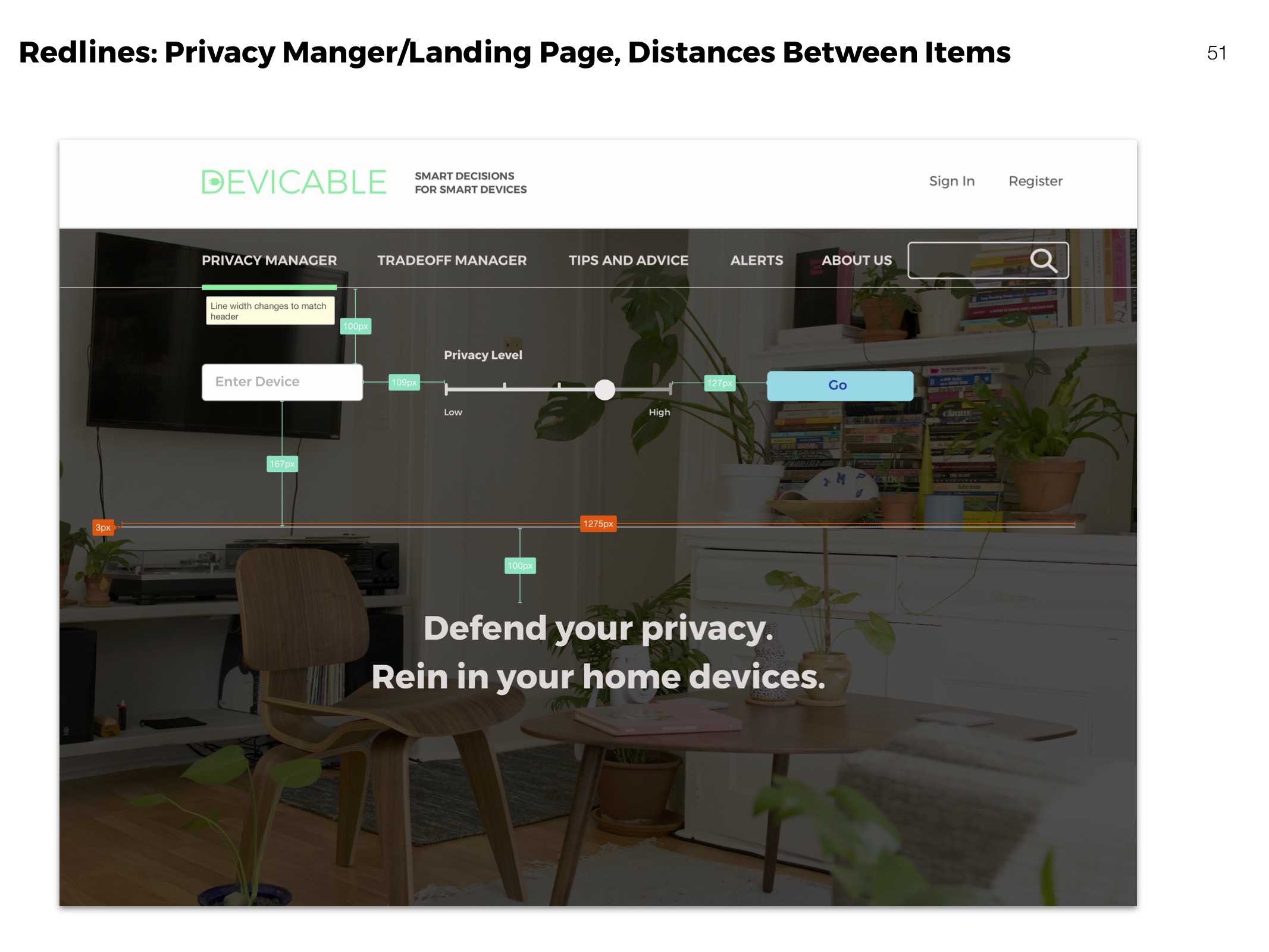

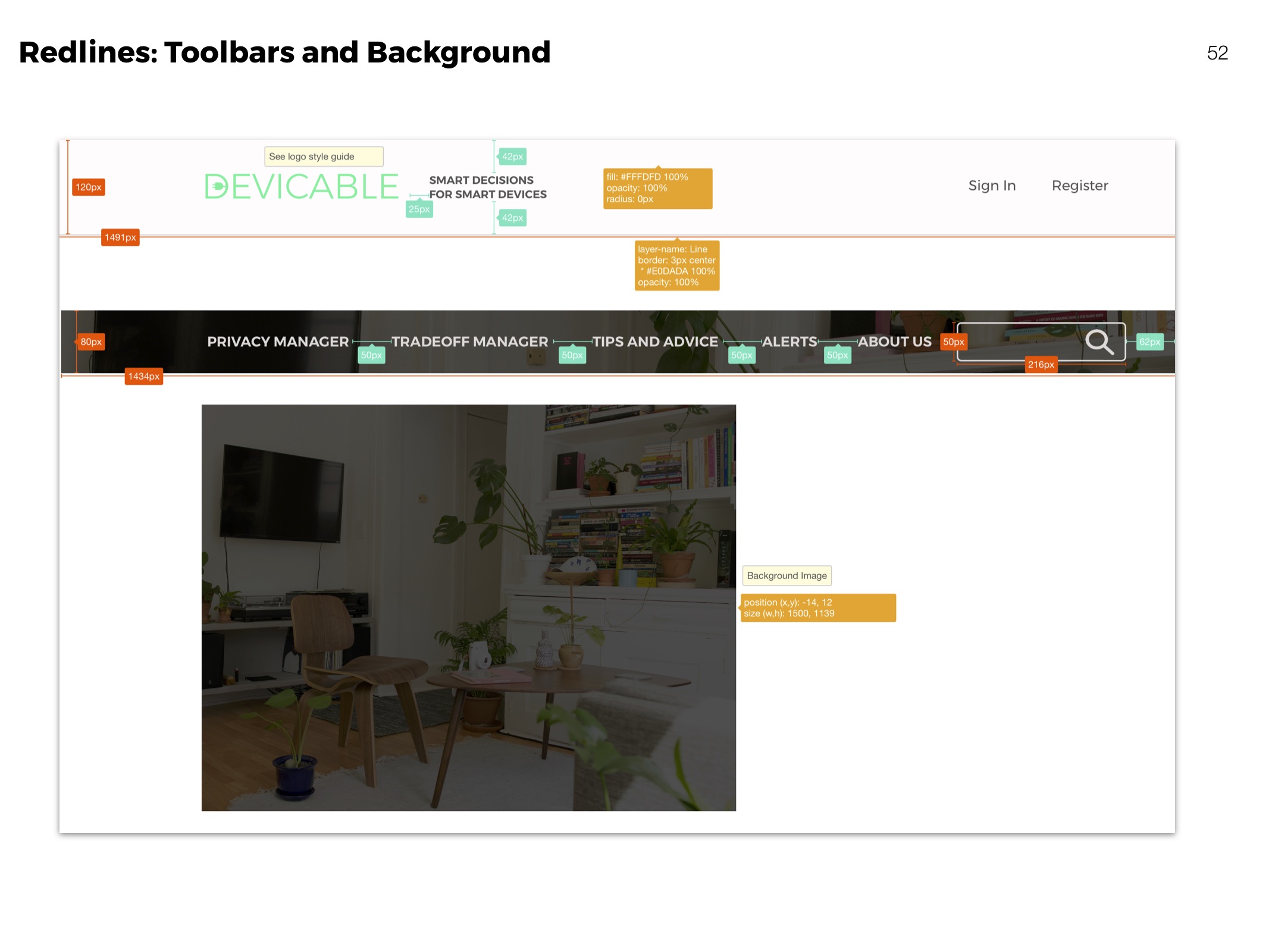

Redlines

Clarifying visual aspects of elements for development.

Reflection

Working on a smaller team empowered me to both learn more and have more influence over the product. The increased responsibility per person meant that I had to learn skills for assets that could easily have been passed off to someone with more expertise if I were on a larger team, such as visual design and the animations that I executed in Keynote.

Future Directions

Building an updatable information database for actions would be a first step in building Devicable. The survey showed that there is a huge presence of home automation power experts online who love sharing their knowledge. Tapping into these networks could have many advantages, such as verifying changing information with expert sources unaffiliated with companies, and keeping Devicable’s recommendations as up-to-date as possible.

The most viable business plan would be to partner with established organizations and forums such as EFF and Mozilla. Fortunately, there is already a community around responsible IoT and design ethics. We began forging these bonds in the research phase, but they would need to be strengthened.